A common language to describe and assess human-agent teams

Using a new taxonomy, an analysis of testbeds that simulate human and autonomous agent teams finds a need for more complex testbeds to mimic real-world scenarios.

Understanding how humans and AI or robotic agents can work together effectively requires a shared foundation for experimentation. A University of Michigan-led team developed a new taxonomy to serve as a common language among researchers, then used it to evaluate current testbeds used to study how human-agent teams will perform.

“Our goal was to bring structure to a rapidly growing and fragmented research area. Without a comprehensive review, research synthesis has been very difficult and has prevented the field from moving forward,” said Xi Jessie Yang, an associate professor of industrial and operations engineering, robotics and information at U-M and corresponding author of the study published in Human Factors.

The study was funded by the National Science Foundation and the Air Force Office of Scientific Research.

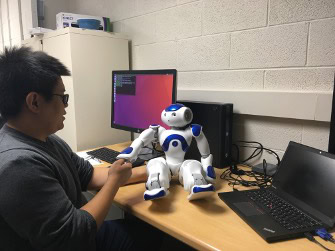

In human-agent teams, also known as human-machine teams, at least one human works with at least one agent, either virtual or embodied (i.e., robotic), to accomplish a common goal. The partnership could be as simple as a human working with a robotic arm to attach a car door to a frame. Or it could be more complex, as with one human giving tactical instructions to a group of embodied AI agents in a search and rescue mission.

“To design AI or robotic teammates that are truly effective, we need testbeds that reflect the messy, dynamic nature of real teamwork. Our taxonomy provides a roadmap for future research to get there,” said Hyesun Chung, a doctoral student of industrial and operations engineering at U-M, Barbour Fellow and lead author of the study.

Just as a taxonomy is used in biology to organize living things into groups and help scientists communicate clearly with one another, this taxonomy aims to create a shared language to guide future human-agent team research. The taxonomy classifies how teams are structured and how they function, using ten attributes.

Beyond improving communication between researchers, the taxonomy can help them identify which attributes to incorporate or modify in new testbed designs, or even which characteristics to build new experiments around.

Using these terms, the research team analyzed 103 testbeds drawn from 235 studies, since some testbeds were used in more than one study. For each testbed, the team also noted the task goal and overall scenario.

While 56.3% (58 cases) of the testbeds had a simple one-human, one-agent composition, only 7.8% (8 cases) involved a larger team consisting of many humans and many agents. Humans assumed leadership roles in most cases, with only two cases allowing either the human or agent to lead, and the dynamics within teams remained static over time.
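As a quick check, the shares reported above can be recomputed from the case counts. The sketch below assumes the 103 analyzed testbeds are the denominator; the helper name `share` is illustrative and does not come from the study.

```python
def share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


TOTAL_TESTBEDS = 103  # testbeds analyzed, as reported in the article

one_to_one = share(58, TOTAL_TESTBEDS)   # one human working with one agent
many_to_many = share(8, TOTAL_TESTBEDS)  # many humans working with many agents

print(f"One human, one agent: {one_to_one}%")
print(f"Many humans, many agents: {many_to_many}%")
```

Both values match the percentages quoted in the article, which supports the assumption that they are expressed as a share of all 103 testbeds.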

Beyond categorizing existing platforms, the taxonomy offers a benchmarking tool for designing new testbeds. This study highlights the need to expand team composition, leadership structure and communication to explore more complex team dynamics between humans and agents.

This research was conducted in collaboration with the Massachusetts Institute of Technology and funded by the National Science Foundation (2045009) and the Air Force Office of Scientific Research (FA9550-23-1-0044).