Accounting for bias in medical data helps prevent AI from amplifying racial disparity

Some sick Black patients are likely labeled as “healthy” in AI datasets due to inequitable medical testing.

Experts

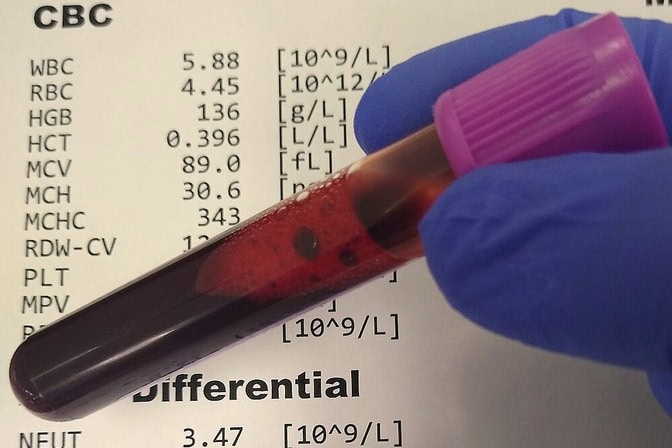

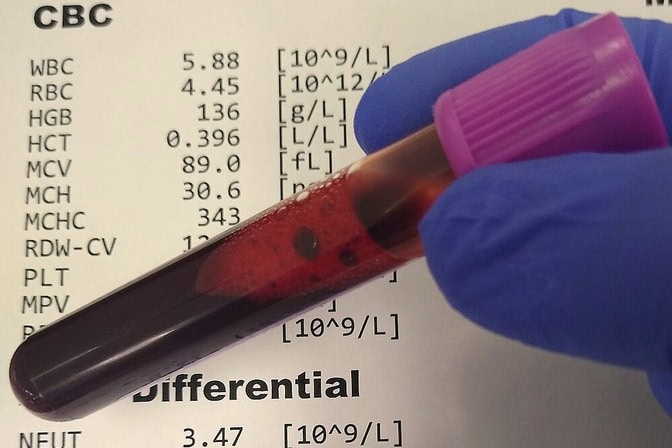

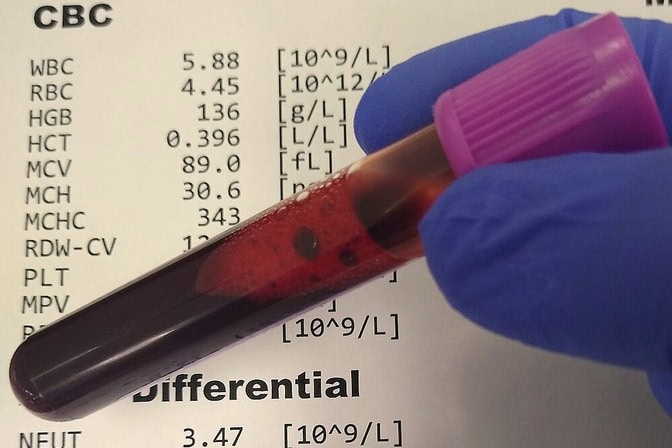

Black patients are less likely than white patients to receive medical tests that doctors use to diagnose severe disease, such as sepsis, researchers at the University of Michigan have shown.

Because of the bias, some sick Black patients are assumed to be healthy in data used to train AI, and the resulting models likely underestimate illness in Black patients. But that doesn’t mean the data is unusable: the same group developed a way to correct for this bias in datasets used to train AI.

These new insights are reported in a pair of studies: one published today in PLOS Global Public Health and the other presented at the International Conference on Machine Learning in Vienna, Austria, in July 2024.

In the PLOS study, the researchers found that medical testing rates for white patients are up to 4.5% higher than for Black patients with the same age, sex, medical complaints and emergency department triage score, a measure of the urgency of a patient’s medical needs. The bias is partially explained by hospital admission rates, as white patients were more likely to be assessed as ill and admitted to the hospital than Black patients.
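Comparing patients “with the same age, sex, medical complaints and emergency department triage score” is a form of adjusting for confounders. As a rough illustration only, the hypothetical Python sketch below does this by stratification: it compares testing rates between white and Black patients within each group of patients who share all four attributes, then averages the differences weighted by group size. The records, field layout and the `stratified_testing_gap` helper are invented for illustration; this article does not describe the statistical model the PLOS study actually used.

```python
from collections import defaultdict

# Hypothetical toy records: (race, age_band, sex, complaint, triage_score, tested)
RECORDS = [
    ("white", "40-59", "F", "chest pain", 2, True),
    ("white", "40-59", "F", "chest pain", 2, True),
    ("Black", "40-59", "F", "chest pain", 2, True),
    ("Black", "40-59", "F", "chest pain", 2, False),
    ("white", "60+", "M", "fever", 3, True),
    ("white", "60+", "M", "fever", 3, False),
    ("Black", "60+", "M", "fever", 3, False),
    ("Black", "60+", "M", "fever", 3, False),
]


def stratified_testing_gap(records):
    """Size-weighted average of within-stratum testing-rate gaps (white minus Black).

    A stratum is one combination of age band, sex, complaint and triage score.
    Only strata that contain patients from both groups are compared.
    This toy version assumes race is always "white" or "Black".
    """
    # counts[stratum][race] = [number tested, number of patients]
    counts = defaultdict(lambda: {"white": [0, 0], "Black": [0, 0]})
    for race, age, sex, complaint, triage, tested in records:
        cell = counts[(age, sex, complaint, triage)][race]
        cell[0] += int(tested)
        cell[1] += 1

    gaps, weights = [], []
    for groups in counts.values():
        (tested_w, n_w), (tested_b, n_b) = groups["white"], groups["Black"]
        if n_w and n_b:  # skip strata with no like-for-like comparison
            gaps.append(tested_w / n_w - tested_b / n_b)
            weights.append(n_w + n_b)
    return sum(g * w for g, w in zip(gaps, weights)) / sum(weights)


print(f"{stratified_testing_gap(RECORDS):.2f}")
```

With these toy records both strata show a 50-percentage-point gap, so the function returns 0.5. Weighting by stratum size is one design choice among several; strata containing only one group are skipped because they offer no comparison between equivalent patients.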

“If there are subgroups of patients who are systematically undertested, then you are baking this bias into your model,” said Jenna Wiens, U-M associate professor of computer science and engineering and corresponding author of the study.

“Adjusting for such confounding factors is a standard statistical technique, but it’s typically not done prior to training AI models. When training AI, it’s really important to acknowledge flaws in the available data and think about their downstream implications.”

The researchers found this bias in medical testing records from two locations: Michigan Medicine in Ann Arbor, Michigan, and one of the most widely used clinical datasets for training AI, the Medical Information Mart for Intensive Care. The dataset contains the records of patients who visited the emergency room at the Beth Israel Deaconess Medical Center in Boston.

Computer scientists need to account for these biases so that AI can make accurate and equitable predictions of patient illness. One option is to train the AI model with a less biased dataset, such as one that only includes records for patients who have received diagnostic medical tests. A model trained on such data might be inaccurate for less ill patients, however.

To correct the bias without omitting patient records, the researchers developed a computer algorithm that identifies whether untested patients were likely ill based on their race and vital signs, such as blood pressure. The algorithm accounts for race because the recorded health statuses of patients identified as Black are more likely to be affected by the testing bias.
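The article does not spell out the algorithm’s details, so the following is not the researchers’ method. It is a much simpler stand-in, sketched in Python under one loud assumption: that the decision to test depends only on recorded information (here, race and a hypothetical blood-pressure band) and that test results are accurate. Under that assumption, the share of tested patients with the same race and vital signs who tested positive is a reasonable estimate of how likely an untested patient in that group is to be ill. The records and the `soft_labels` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical records: (race, bp_band, tested, test_positive).
# test_positive is None for untested patients, whose recorded label
# would otherwise default to "healthy".
RECORDS = [
    ("Black", "low", True, True),
    ("Black", "low", True, True),
    ("Black", "low", True, False),
    ("Black", "low", False, None),
    ("Black", "normal", True, False),
    ("Black", "normal", False, None),
    ("white", "low", True, True),
    ("white", "low", False, None),
]


def soft_labels(records):
    """Return one illness probability per record.

    ASSUMPTION: testing depends only on race and bp_band, and tests are accurate.
    Tested patients keep their observed result (1.0 or 0.0). Untested patients
    get the positive-test rate among tested patients with the same race and
    bp_band, falling back to 0.0 if nobody in that group was tested.
    """
    positives = defaultdict(int)
    n_tested = defaultdict(int)
    for race, bp, tested, positive in records:
        if tested:
            n_tested[(race, bp)] += 1
            positives[(race, bp)] += int(positive)

    labels = []
    for race, bp, tested, positive in records:
        if tested:
            labels.append(1.0 if positive else 0.0)
        else:
            key = (race, bp)
            labels.append(positives[key] / n_tested[key] if n_tested[key] else 0.0)
    return labels


print(soft_labels(RECORDS))
```

The resulting probabilities could serve as soft training targets in place of the default “healthy” label; here the untested Black patient with low blood pressure gets 2/3 rather than 0. If doctors also test based on cues that never reach the record, tested patients will tend to be sicker than untested patients with the same recorded vitals, and this simple estimate would overstate illness. The sketch makes no attempt to handle that case.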

The researchers tested the algorithm with simulated data, in which they introduced a known bias by relabeling patients identified as ill as “untested and healthy.” They then used this dataset to train a machine learning model, the results of which were presented at the International Conference on Machine Learning.
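The validation step can be mimicked in a few lines: start from ground-truth labels, then relabel a fraction of ill patients as “untested and healthy,” with a higher fraction in one group. In this hypothetical Python sketch, groups `A` and `B`, the 20% prevalence and the miss rates are all invented values, not figures from the study; group `B` plays the undertested group.

```python
import random


def simulate_undertesting(n, miss_rate, seed=0):
    """Hypothetical simulation of testing bias.

    Draw ground-truth illness for n patients (assumed 20% prevalence in both
    groups), then record each ill patient as 'untested and healthy' with
    probability miss_rate[group]. Healthy patients are always recorded healthy.
    Returns a list of (group, truly_ill, recorded_ill) tuples.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        group = rng.choice(["A", "B"])
        ill = rng.random() < 0.2
        recorded = ill and rng.random() >= miss_rate[group]
        rows.append((group, ill, recorded))
    return rows


def recorded_rate_among_ill(rows, group):
    """Fraction of truly ill patients in `group` whose recorded label is 'ill'."""
    recorded_for_ill = [rec for g, ill, rec in rows if g == group and ill]
    return sum(recorded_for_ill) / len(recorded_for_ill)


rows = simulate_undertesting(20_000, miss_rate={"A": 0.1, "B": 0.4})
for g in ("A", "B"):
    print(g, round(recorded_rate_among_ill(rows, g), 2))
```

With miss rates of 10% and 40%, roughly 90% of ill patients in group `A` keep an “ill” label versus roughly 60% in group `B`, even though both groups are equally sick. A model trained on the recorded labels would see group `B` as healthier than it is, which is the pattern a correction for testing bias is meant to undo.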

When the researcher-imposed bias was corrected with the algorithm, a textbook machine-learning model could accurately differentiate between patients with and without sepsis around 60% of the time. Without the algorithm, the biased data made the model’s performance worse than random.

The improved accuracy was on par with that of a textbook model trained on unbiased, simulated data in which everyone was equitably tested. Such unbiased datasets are unlikely to exist in the real world, but the researchers’ approach allowed the AI to perform about as accurately as it would in the idealized scenario, despite being stuck with biased data.

“Approaches that account for systematic bias in data are an important step towards correcting some inequities in healthcare delivery, especially as more clinics turn toward AI-based solutions,” said Trenton Chang, a doctoral student in computer science and engineering and the first author of both studies.

The research was funded by the National Institutes of Health.

Mark Nuppnau, Ying He and Michael Sjoding from Michigan Medicine and Keith E. Kocher and Thomas S. Valley from Michigan Medicine and the VA Center for Clinical Management Research in Ann Arbor, Mich., also contributed to the PLOS study.