Augmented reality for testing nuclear components

A new machine learning platform detects and quantifies radiation-induced defects instantaneously and could be extended to interpret other microscopy data.

A new machine vision system for testing materials and parts for nuclear reactors shows damage, such as swelling and defects due to radiation, in real time. It could speed up the development of components for advanced nuclear reactors, which may play a critical role in reducing greenhouse gas emissions to fight climate change.

“We believe we are the first research team to ever demonstrate real-time image-based detection and quantification of radiation damage on the nanometer length scale in the world,” said Kevin Field, an associate professor of nuclear engineering and radiological sciences at the University of Michigan and vice president of the machine vision startup Theia Scientific.

The interpretation technique could be adapted for other types of image-based microscopy.

“We see clear pathways to accelerate discoveries in the energy, transportation and biomedical sectors,” Field said.

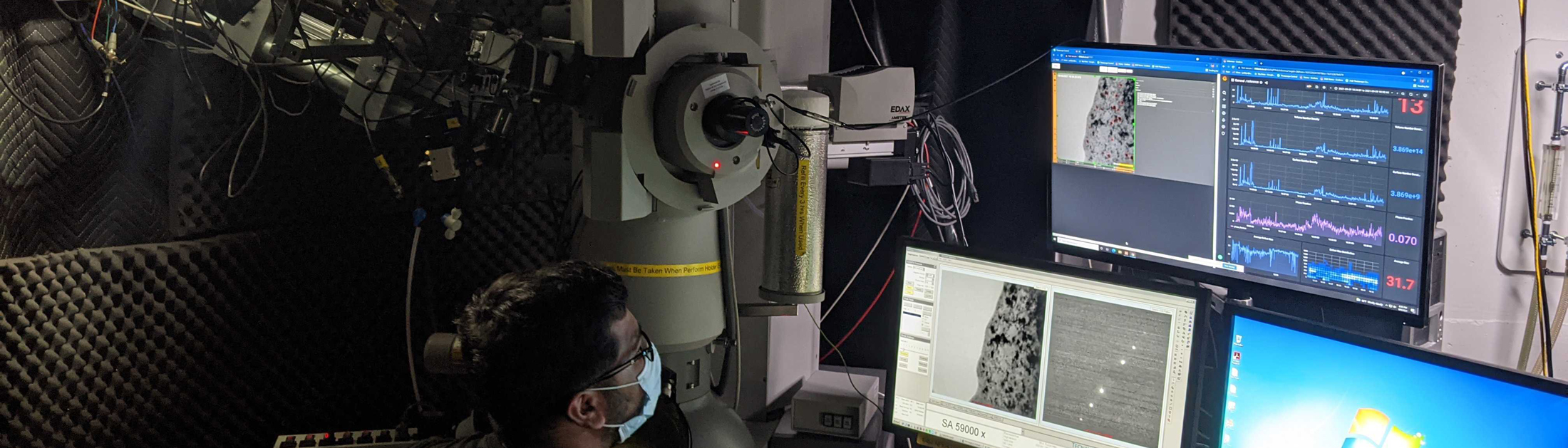

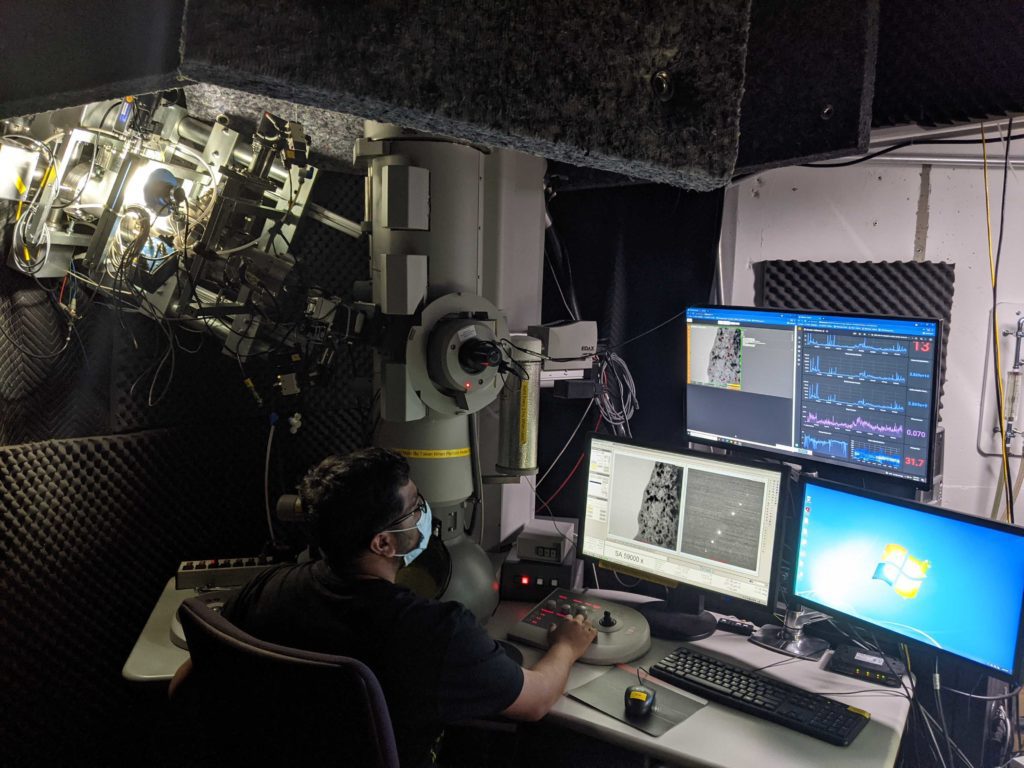

The new technology was tested at the Michigan Ion Beam Laboratory. By sending beams of charged atoms—known as ions—at material samples, the lab can quickly emulate the damage sustained after years or decades of use in a nuclear reactor. The team used an ion beam of the noble gas krypton to test a sample of iron, chromium and aluminum—a radiation-tolerant material of interest for both fission and fusion reactors.

“If radiation exposure makes your metal like Swiss cheese instead of a good Wisconsin cheddar, you would know it’s not going to have structural integrity,” Field said.

The krypton ions hitting the sample create radiation defects, in this case planes of missing or extra atoms sandwiched between two ordinary crystal lattice planes. They appear as black dots in the electron microscope images. The microscope runs during irradiation and records a video, so the lab can watch the defects develop.

“Previously, we would record the whole video for the irradiation experiments and then characterize just a few frames,” said Priyam Patki, a postdoctoral researcher in nuclear engineering and radiological sciences who ran the experiment with Christopher Field, president of Theia Scientific. “But now, with the help of this technique, we are able to do it for each and every frame, giving us an insight into the dynamic behavior of the defects—in real-time.”

To assess radiation-induced defects, researchers would typically download the video, go back to the office, and count every defect in selected frames. Modern microscopes produce hundreds or even thousands of images or video frames, and counting defects manually in every one is too laborious, so much of the detailed information would be lost.

Instead of counting manually, the team used Theia Scientific’s technology to detect and quantify the radiation-induced defects instantaneously during the experiment. The software overlays graphics on the electron microscope imagery that label the defects, giving their size, number, location and density, and it summarizes this information as a measure of structural integrity.
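To give a sense of what quantifying defects in a single frame can involve, here is a minimal Python sketch. It is not Theia Scientific's software: the `Detection` class, the `summarize_frame` function, and the pixel calibration are hypothetical, and it reports a simple areal density (defects per square nanometer of image) rather than whatever density measure the team actually uses.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detector output: one bounding box, in pixels."""
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float


def summarize_frame(detections, nm_per_pixel, frame_width_px, frame_height_px):
    """Return count, mean size (nm) and areal density (per nm^2) for one frame."""
    count = len(detections)
    # Assumption: approximate each defect's size as the mean of its box sides.
    sizes_nm = [0.5 * (d.width + d.height) * nm_per_pixel for d in detections]
    mean_size_nm = sum(sizes_nm) / count if count else 0.0
    # Imaged area in square nanometers.
    area_nm2 = (frame_width_px * nm_per_pixel) * (frame_height_px * nm_per_pixel)
    return {
        "count": count,
        "mean_size_nm": mean_size_nm,
        "areal_density_per_nm2": count / area_nm2,
    }


# Example with made-up numbers: two detections in a 100 x 200 pixel frame.
example = summarize_frame(
    [Detection(10, 10, 4, 6), Detection(50, 20, 8, 8)],
    nm_per_pixel=0.5,
    frame_width_px=100,
    frame_height_px=200,
)
```

Running the same summary on every frame of a video would yield a time series of defect counts and sizes, which is the kind of frame-by-frame view the researchers describe.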

The machine learning software uses a convolutional neural network, a type of artificial neural network well suited to interpreting images, to analyze the electron microscope video frames. The network interpreted frames quickly and robustly across samples of varying quality, which enabled the leap from manual interpretation to real-time machine vision.
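The core operation in a convolutional network is sliding a small grid of weights, called a kernel, across the image. The toy Python example below illustrates that one step on a tiny bright image containing a single dark pixel, standing in for a black-dot defect. The kernel here is hand-picked for illustration; a trained network learns its kernels from labeled data, and the article does not describe the actual architecture, so this is only a sketch of the general idea.

```python
def convolve2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for a in range(kh):
                for b in range(kw):
                    total += image[i + a][j + b] * kernel[a][b]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Keep positive responses and set negative ones to zero."""
    return [[max(0.0, value) for value in row] for row in feature_map]


# 5 x 5 bright image (1.0) with one dark pixel (0.0) in the middle.
image = [[1.0] * 5 for _ in range(5)]
image[2][2] = 0.0

# Hand-picked kernel that responds when the center is darker than its surroundings.
kernel = [
    [1.0, 1.0, 1.0],
    [1.0, -8.0, 1.0],
    [1.0, 1.0, 1.0],
]

response = relu(convolve2d_valid(image, kernel))
# The response is nonzero only where the kernel is centered on the dark pixel.
```

A real network stacks many such learned kernels in layers, which is what lets it cope with noisy or low-contrast frames.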

“The real-time assessment of structural integrity allows us to stop early if a material is performing badly and cuts out any extensive human-based quantification,” Kevin Field said. “We believe that our process reduces the time from idea to conclusion by nearly 80 times.”

The project was funded by the U.S. Department of Energy Small Business Innovation Research Program (Phase 1). Theia Scientific is currently developing its proposal for Phase 2, which would enable the completion of the principal research and development efforts. The company expects preproduction units to be available in 2022.

Other collaborators on the project include Kai Sun, an associate research scientist in materials science and engineering at U-M; Fabian Naab, a research lab specialist in nuclear engineering and radiological sciences at U-M; and Dane Morgan, the Harvey D. Spangler Professor of Engineering at the University of Wisconsin.

The machine-learning algorithm relied on datasets developed at the University of Michigan, which Theia Scientific seeks to license. Kevin Field has a financial interest in Theia Scientific. Theia Scientific was co-founded in 2020 by the Field brothers.