Auto industry deadlines loom for impaired-driver detection tech, U-M offers a low-cost solution

Current technologies already in use could help prevent crashes and deaths linked to impaired driving.

Cameras similar to those already on newer model cars, combined with facial recognition tools, could read the “tells” of impairment in the face and upper body of a driver, University of Michigan engineers have shown.

This low-cost system could effectively detect drunk, drowsy or distracted drivers before they get on the road—or while they are on the road. A new federal requirement for all new passenger vehicles to have this safeguard passed as part of the 2021 Infrastructure Investment and Jobs Act, and the deadline could come as soon as 2026.

More than a third of deaths on the road in the United States alone are from drunk driving. The question is, can technology help solve that problem? When you drink, your blood vessels get larger and, as a consequence, you get a lot more blood flow to the face, which is why your face turns red. What we envision is a camera, which might be mounted on the rearview mirror. In the infrared, where these cameras operate, the light penetrates below the surface of the skin, so you can actually measure that blood flow.

We can also measure changes in heart rate, and we can look at chest motion to get respiratory rate. We can also look at your eyelids: as you get drowsy or sleepy, for example, your eyes get droopy. I believe studies have shown that with technology of this kind in the vehicle, as many as 10,000 lives a year could be saved in the United States alone. That’s a lot of lives to save, and worthwhile doing.

The standards that these new safety systems will have to meet are currently under discussion, and the National Highway Traffic Safety Administration’s comment period closes March 5. While the details are up in the air, the U-M team is confident that their system can meet the new requirements in a cost-effective way.

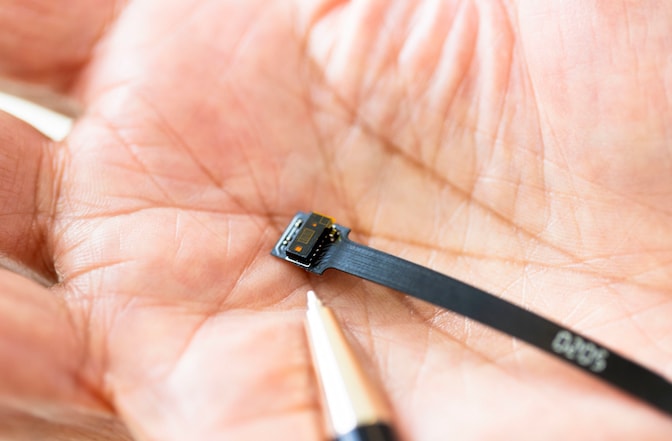

“You already see these 3D camera technologies in products like smartphones, tablets and mixed reality devices,” said Mohammed Islam, U-M professor of electrical engineering and computer science who leads the project. “And these are small, inexpensive cameras that can easily be mounted on the rearview mirror, the steering column or other places in the driver’s cockpit.

“In many new vehicles, Advanced Driver Assistance System (ADAS) cameras are already onboard to track driver alertness. They’ve already been matured and are cost effective.”

Islam’s team proposes augmenting existing ADAS cameras with infrared Light Detection and Ranging (LiDAR) or structured light 3D cameras costing roughly $5-$10. Their proof-of-concept experiments, which use artificial intelligence tools to interpret data captured by the 3D cameras, can identify five signs that a driver may be impaired.

Blood flow

LiDAR sees under the skin to capture increases in flow.

Increased heart rate

It also monitors and calculates changes in heart rate.

Droopiness of the eyelids

The system flags eyelids that droop or blink slower than normal.

Head/Body posture

It captures changes in head position and body posture.

Changes in respiratory rate

The rise and fall of the chest allows monitoring of respiratory rate.
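
The article does not describe how the team turns camera data into a heart or respiratory rate. Purely as an illustration, the sketch below assumes the camera yields a roughly periodic signal (for example, chest displacement per frame) and estimates a rate by counting how often the signal rises through its mean. The function name, the 30 frames-per-second rate and the synthetic sine wave are all hypothetical stand-ins, not details from the U-M system.

```python
import math


def estimate_rate_per_minute(samples, sample_rate_hz):
    """Estimate cycles per minute of a roughly periodic signal.

    Counts upward crossings of the signal's mean; each crossing marks one cycle.
    This is a simple illustrative method, not the researchers' algorithm.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]
    crossings = sum(
        1 for prev, cur in zip(centered, centered[1:]) if prev < 0 <= cur
    )
    duration_s = len(samples) / sample_rate_hz
    return crossings * 60.0 / duration_s


# Synthetic stand-in for chest displacement (assumed values):
# 0.25 Hz breathing, i.e. 15 breaths per minute, sampled at 30 frames/s for 60 s.
FPS = 30
chest = [math.sin(2 * math.pi * 0.25 * (n / FPS) + 0.5) for n in range(FPS * 60)]
breaths_per_minute = estimate_rate_per_minute(chest, FPS)
print(breaths_per_minute)
```

Real camera signals are noisy, so a practical system would likely need filtering before any counting step of this kind.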

The team demonstrated that the system can measure vital signs, detect drowsiness and provide data that correlates with breathalyzer readings. The researchers are now working with Tier 1 auto suppliers, including DENSO, and Tier 2 suppliers that make the cameras to further develop and potentially commercialize the technology.

The system is cheaper and harder to cheat than in-vehicle breathalyzers, which could cost as much as $200 per vehicle. A breathalyzer may be defeated by someone else performing the test on the driver’s behalf, or by opening the windows or otherwise diluting the air near the driver.

The use of light to measure blood may raise concerns about equity, since pulse oximeters were shown to give incorrect readings for patients with darker skin. However, the infrared light used by 3D cameras is not affected by melanin, the pigment that determines skin color.

The 3D cameras also overcome two other challenges that prevent conventional cameras from performing this role effectively. By measuring only the infrared light sent out by the camera, the system can ignore changes in ambient light. In addition, the 3D camera can track the motion of the driver, preventing a different angle from looking like a change in the driver’s face.
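
The article does not say how the cameras isolate their own infrared light. One approach sometimes used in active-illumination imaging, shown here only as an assumption, is to capture one frame with the emitter on and one with it off, then subtract the second from the first so that only the emitter's contribution remains. The function name and pixel values below are hypothetical.

```python
def subtract_ambient(lit_frame, unlit_frame):
    """Return per-pixel intensity attributable to the camera's own emitter.

    lit_frame: pixel intensities captured with the infrared emitter on.
    unlit_frame: the same scene captured with the emitter off (ambient only).
    Illustrative only; not a description of the U-M system.
    """
    if len(lit_frame) != len(unlit_frame):
        raise ValueError("frames must have the same number of pixels")
    # Clamp at zero so sensor noise cannot produce negative intensities.
    return [max(lit - ambient, 0) for lit, ambient in zip(lit_frame, unlit_frame)]


# Hypothetical pixel values: the same emitter return under dim and bright ambient light.
emitter_return = [40, 55, 60]
dim = subtract_ambient([e + 5 for e in emitter_return], [5, 5, 5])
bright = subtract_ambient([e + 120 for e in emitter_return], [120, 120, 120])
print(dim == bright)
```

In this toy case the recovered signal is the same under both lighting conditions, which is the property the paragraph above describes.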

Drunk driving remains one of the leading causes of death on America’s roads. In 2021, the most recent year for which data is available, NHTSA reported that 13,384 deaths were linked to drunk driving, up 14% from the previous year. The agency estimates that an average of 37 people die each day in drunk driving crashes.
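
As a quick consistency check on these figures, the short calculation below divides the 2021 total by 365 days and backs out the prior-year count implied by a 14% rise. The implied 2020 figure is an inference from the rounded percentage, not a number reported in this article.

```python
deaths_2021 = 13_384      # NHTSA figure cited above
days_in_2021 = 365

# Average deaths per day; rounds to the 37 cited above.
deaths_per_day = deaths_2021 / days_in_2021

# Prior-year total implied by a 14% increase (approximate, since 14% is rounded).
increase = 0.14
implied_2020 = deaths_2021 / (1 + increase)

print(round(deaths_per_day, 1), round(implied_2020))
```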

This technology was developed with support from DENSO, Omni Sciences and the University of Michigan. Omni Sciences is a U-M startup founded by Islam, in which Islam has a financial interest. U-M is seeking partners to bring the technology to market.