Building curious machines

We know more about Mars than our own oceans and lakes. Could artificial intelligence provide answers?

Story by Gabe Cherry, photos by Marcin Szczepanski

How could an entire commercial jetliner simply vanish? That question hung heavy in the global consciousness after Malaysia Airlines Flight 370 disappeared into the Indian Ocean on March 8, 2014. The tragedy, which took the lives of 239 passengers and crew members, is one of the most haunting aviation mysteries of all time.

The MH370 search in a remote and previously unexplored stretch of ocean took 1,046 days and cost more than $129 million. It covered an area of 46,000 square miles—larger than the state of Pennsylvania but only about 0.03% of the 139 million square miles of the planet that are covered by water.
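For a sense of scale, the percentage above can be reproduced in a few lines of Python. The two areas are the article's own figures; the helper name `percent_of` is illustrative.

```python
# Rough check of the search-area fraction quoted above.
SEARCH_AREA_SQ_MI = 46_000          # MH370 underwater search area
OCEAN_AREA_SQ_MI = 139_000_000      # area of the planet covered by water

def percent_of(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole

share = percent_of(SEARCH_AREA_SQ_MI, OCEAN_AREA_SQ_MI)
print(f"{share:.2f}%")  # prints "0.03%"
```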

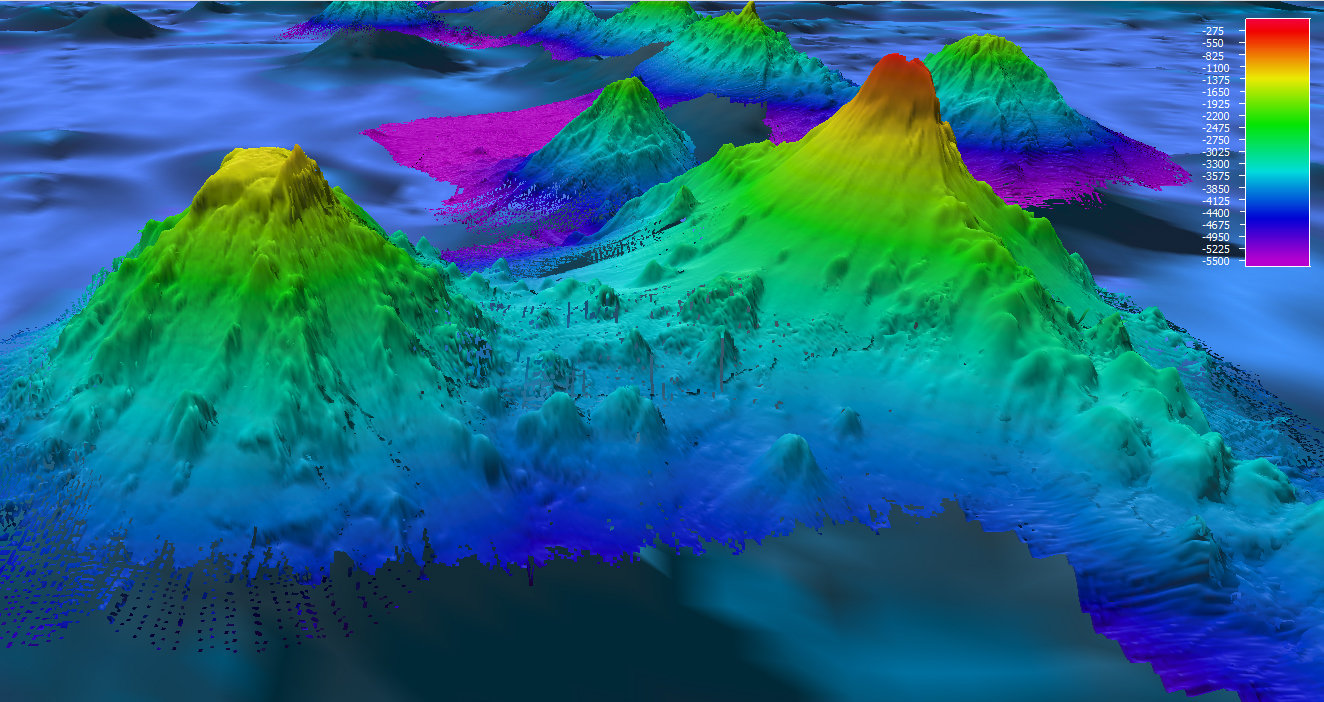

While the search didn’t locate MH370, it did stumble across a lot of unrelated but valuable information. It revealed a previously unknown underwater landscape of ridges, volcanoes, valleys, shifting tectonic plates and massive landslides. It also showed seamounts—underwater mountains that attract vast quantities of fish and other wildlife—and two previously undiscovered 19th-century shipwrecks. The data will be useful to geologists, climate modelers, archaeologists and fishers for decades to come.

The MH370 search brought into stark reality just how little we know about the 71% of the Earth’s surface that is covered by water. And finding answers is more than a matter of curiosity. Larry Mayer is the co-leader of the Arctic and North Pacific Data Center of the Seabed 2030 project, an initiative that aims to map the entire ocean floor by 2030. He explains that if we want to be good stewards of the planet, we can no longer afford to ignore the parts of it that happen to be underwater.

“Seafloor mapping can help us understand ocean currents that drive our climate system by moving heat around the planet, help us understand ecosystems, help us find natural resources, help us predict earthquakes and tsunamis, and of course, any time you want to do any kind of engineering on the seafloor, you need a very accurate map,” Mayer said. “But the most exciting thing is that we just don’t know what’s there. You can’t go out and map the seafloor without finding something exciting.”

Video transcript

[visual: underwater footage of a medium-sized shipwreck with scuba divers slowly exploring it.]

Wayne R. Lusardi:

So in Michigan there are about 1,500 known shipwrecks in Michigan waters around the Great Lakes. Most of them are from the 19th century, so they’re schooners and barks and brigs and things like that. And at the turn of the century, the end of the 1800s and early 1900s, there are many more steamers and paddle wheelers, propellers, bulk freighters, package freighters.

[on-screen text: WAYNE R. LUSARDI, STATE MARITIME ARCHEOLOGIST, MICHIGAN DEPARTMENT OF NATURAL RESOURCES]

[visual: scuba diver swims inside of the shipwreck and then around a large propeller.]

Narration:

Worldwide, an estimated 3 million shipwrecks lie on the bottom of oceans and lakes. Fewer than one percent of these wrecks have actually been found.

[visual: scuba diver looks at the camera and points to the side.]

[on-screen text: GUY MEADOWS, DIRECTOR, GREAT LAKES RESEARCH CENTER, MICHIGAN TECHNOLOGICAL UNIVERSITY]

Guy Meadows:

I’ve spent 45 years of my life looking for shipwrecks, but 98% of that time is looking at wonderful images of absolutely nothing.

[visual: screen recording of sonar imagery of the lakebed. On-board camera of IVER, a torpedo-like autonomous underwater vehicle moving along the surface of the water towards a small research boat.]

Narration:

Now, a team led by University of Michigan robotics professor Katie Skinner is developing technology that will explore the seafloor much as a human would. It uses artificial intelligence to scour sonar data and quickly identify areas that warrant a closer look. Initial tests are taking place on Lake Huron in northern Michigan.

[visual: aerial of the small boat leaving a marina. People wearing life vests with “NOAA Great Lakes” printed on them load IVER onto the boat and climb aboard. The boat leaves the docks for open water.]

Katie Skinner:

So right now we’re at the Alpena Marina. This is our kind of starting point for every mission. We can go out from here to the Thunder Bay National Marine Sanctuary. Thunder Bay has a hundred known shipwreck sites and 100, up to 100 unknown sites or undiscovered sites. We’ll start with the known shipwrecks here but hopefully we’ll discover something new too.

[on-screen text: KATIE SKINNER, ASSISTANT PROFESSOR, ROBOTICS DEPARTMENT, COLLEGE OF ENGINEERING, UNIVERSITY OF MICHIGAN]

Team member:

“So, we’re going to adjust the survey pattern to hopefully fly over both wrecks in a single underwater mission.”

[visual: a group of about six looks at a computer screen on the boat and then look out towards the water.]

Guy Meadows:

I mean the hopes and dreams is to be able to teach autonomous vehicles how to really search the seabed and to recognize the important things on the bottom for finding wrecks from all the other natural occurring things on the bottom of the water.

[visual: People on the boat carefully lower IVER into the water and it propels itself away. The on-board camera shows it moving along the surface and then diving underwater.]

Katie Skinner:

So, we just deployed the IVER for its first mission of the day. So we deployed off the side of the boat, we watch it go to its first waypoint on the surface and then it will dive down for the first leg. We programmed a lawnmower pattern, which means it will do eight different legs in kind of a lawnmower fashion for this survey.

[visual: underwater footage of the shipwreck.]

Guy Meadows:

What we’re trying to look at is, we know where the shipwreck is, this is a well known wreck, but we’re looking for clues that would teach underwater vehicles like ours to be able to find wrecks more efficiently. So we’re looking for patterns in the bottom debris that may have fallen off the ship during the time it was sinking.

[visual: IVER resurfaces.]

Narration:

The system can look for clues in the vast amount of existing sonar data that’s publicly available. It can also help new missions find areas of interest more quickly.

[visual: Guy Meadows looks out at the water through binoculars. IVER approaches the boat. The team looks at a laptop while on the boat. Sonar imagery on the screen shows an overhead view of the shipwreck.]

Team member:

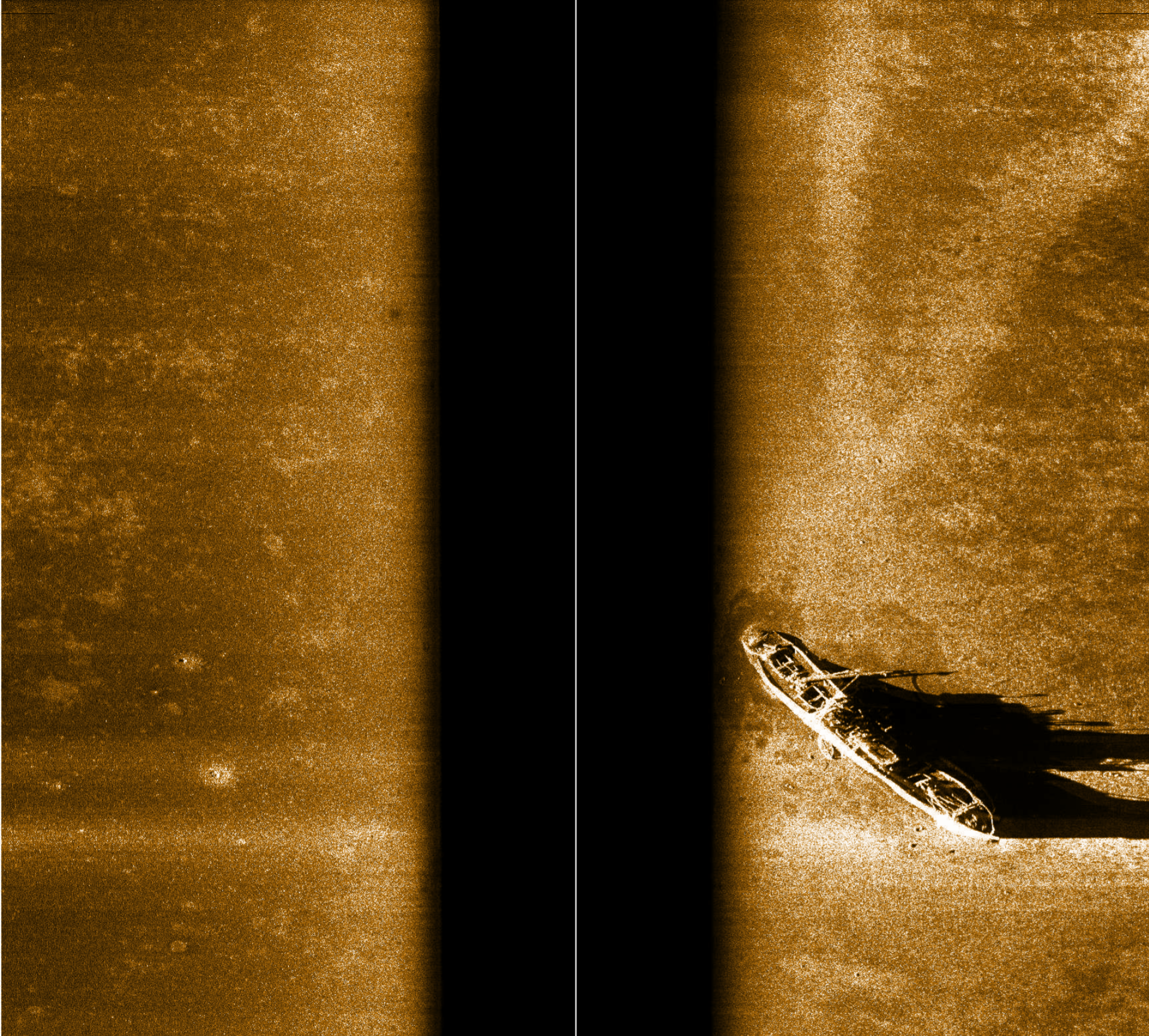

“So this is a sonar image of the Lucinda Van Valkenburg schooner here that wrecked in Thunder Bay as a result of a collision, and we’re able to see a sort of plan view going right over the top of the wreck, and you can kind of see the debris field at the stern.”

Narration:

While using underwater vehicles and sonar is not new, teaching computers to autonomously identify the best areas to explore is on the cutting edge of this technology.

[visual: A time-lapse of a few individuals in a conference room reviewing sonar imagery on a TV.]

Katie Skinner:

Yes, we can think about the shipwreck detection problem as similar to anomaly detection. So we want to give the system a lot of experience seeing large amounts of data that don’t necessarily have shipwrecks; it’s just about the general terrain. This allows it to learn what the features of the general terrain are, so when it comes across new features, like shipwreck sites or other archaeological sites of interest, it can recognize that that is something it hasn’t really seen before.

[visual: the group is on the boat and they bring IVER back aboard.]

Conversation on boat:

“AV just popped up over here.”

“Yeah I think we’re gonna pull it in.”

“Yep we’re gonna pull in IVER.”

Team member:

“Right now I’m copying some of the sonar data off the vehicle that we just recovered.”

Guy Meadows:

The problem we’re trying to solve, and many people are facing this problem, Malaysian Flight 370 is a perfect example: the clues are that it was lost somewhere in the Indian Ocean, and it’s an enormous amount of territory to try and cover with sonar in order to see something like a wreck, whether it’s an airplane or whether it’s a ship. And the same is true here in the Great Lakes. We need to have ways to be able to refine the search to areas that are most probable to contain clues about the wreck, so that is the main problem that we’re trying to solve here.

[visual: a photograph of a Malaysian Airlines aircraft. Sonar imagery of the lakebed. Underwater footage of the earlier shipwreck. The crew prepares the boat for returning to shore. Another shot of IVER diving back underwater.]

Narration:

A successful automated system could help us understand the ocean and lake floor and uncover secrets still hidden beneath the sea.

[on-screen text: Research Team: Katherine A. Skinner, Principal Investigator, Asst. Professor, University of Michigan; Corina Barbalata, Co-Principal Investigator, Asst. Professor, Louisiana State University; Guy A. Meadows, Co-Principal Investigator, Michigan Technological University; William Ard, Master’s Student, Louisiana State University; Mason Pesson, Master’s Student, Louisiana State University; Advaith Sethuraman, Doctoral Student, University of Michigan; Christopher Pinnow, Electrical & Computer Engineer, Great Lakes Research Center; Wayne R. Lusardi, State Maritime Archeologist, Michigan DNR; This work is supported by NOAA Ocean Exploration under Award #NA21OAR0110196; “No lives were lost during the sinking of the schooner Lucinda Van Valkenburg in 1887.”]

[on-screen text: Filmed and Edited by Marcin Szczepanski; Associate Producers, Gabe Cherry, Jeremy Little, Nicole

Better ocean maps could, of course, help us understand ourselves better as well. They could provide valuable clues to archaeologists who are trying to piece together a more complete understanding of human history. And they could help bring closure to families who have lost loved ones in disasters like MH370.

Seabed 2030 has more than doubled the share of the seafloor that has been mapped, from less than 10% in 2017 to just over 20% today, through a combination of new missions and making existing mapping data publicly available. But all that newly available data has created a second problem: what to do with it.

The raw material of ocean mapping is sonar data. And today, that data must be analyzed by experts who spend years of their lives watching imagery of the ocean floor scroll slowly by on a screen. They’re masters at picking out telltale signs of human-made structures and other objects of interest. An oddly precise angle in a mound of sediment, a strangely shaped bit of wood or even an unusual shadow can tip them off to a major find. It’s slow work.

“You have to send down the vehicle, recover it, download the data, analyze it and then decide whether to send another vehicle down,” Mayer said. “A vehicle that could make decisions on its own, that could identify things on its own and then start doing a detailed survey, could save a tremendous amount of time and money.”

Building a robot explorer

A team led by Katie Skinner, a U-M robotics assistant professor, is working to make just such a vehicle a reality. It’s combining robotics, naval architecture and computer science to build a software system that can trawl through sonar data much as a human would. Installed on an autonomous underwater vehicle, it could do an initial scan and then dive deeper to gather more detailed data on sites of interest, combining several missions into one. The same software could also be turned loose on the sonar data collected by projects like Seabed 2030, identifying sites of interest that could inform future missions.

“It’s amazing to me that we know more about the surface of Mars and the Moon than we do about our own seabed,” Skinner said. “I hope that in 20 years we’ll see fleets of robots going out into the open sea, collecting data and informing scientists.”

To make that happen, the team will need to teach a machine how to tell a mast from a tree trunk, how to read delicate current patterns in the sand and countless other tricks that humans have learned over hundreds of years of scouring the seafloor.

So Skinner has enlisted a team of ocean researchers and divers, including Guy Meadows, Michigan Technological University senior research scientist and director of marine engineering technology at the Great Lakes Research Center; Wayne Lusardi, state maritime archaeologist at the Thunder Bay National Marine Sanctuary; and Corina Barbalata, an assistant professor of mechanical and industrial engineering at Louisiana State University.

Barbalata, an expert in robotic navigation and control, is developing systems that will help future underwater vehicles navigate previously unknown terrain in a more sophisticated way than today’s AUVs, knowing not just what to avoid, but also which objects to examine more closely.

“Today’s AUVs follow pre-set patterns, but that doesn’t work when you’re exploring an unknown environment, specifically searching for a shipwreck,” she explains. “The robot needs to be able to extract features to determine the characteristics of the object and plan a path of exploration on the spot—and do that within the limited processing power and battery life of an untethered vehicle. It’s quite a challenge.”

Meadows and Lusardi, having dedicated their lives to the sea, bring a somewhat different perspective. Meadows spent 35 years as a U-M professor of atmospheric, oceanic and space sciences and naval architecture and engineering before joining Michigan Tech in 2012 to help launch its then-new Great Lakes Research Center as its founding director. Lusardi has been diving shipwrecks and archaeological sites since he was a teenager; he spent more than 30 years exploring wrecks and archaeological sites in Illinois, the U.S. Atlantic Coast and the Caribbean before joining the Thunder Bay sanctuary in 2002.

Decades on the water have taught Meadows and Lusardi that humans are no match for the sheer scale of the sea. They hope that new technology can enable future researchers to spend less time searching and more time finding.

“I’ve spent 45 years of my life looking for shipwrecks and other cultural infrastructure on the bottom of oceans and lakes,” Meadows said. “And 98% of that time has been looking at images of absolutely nothing. I’ve been involved in discovering evidence from important events, and unfortunately, I’ve also been involved in searching the depths for bodies to provide families with closure after accidents. It would be tremendous if we could develop a machine to make that process much faster and far more efficient.”

In the broadest sense, training a machine learning system to search for shipwrecks is similar to training an autonomous car to drive safely on the streets: developers train both models by showing them images of things they’re likely to encounter in the real world. A self-driving car, for example, might process thousands or millions of images of streets, with things like other vehicles, pedestrians and road signs labeled by humans. It might even watch footage of Grand Theft Auto.

The particulars of training an underwater search vehicle, however, are much more complicated. That’s chiefly because there just isn’t a lot of underwater imagery out there, particularly of unusual features like shipwrecks.

Fortunately for the team, there’s a massive trove of data in Lusardi’s backyard—the Thunder Bay National Marine Sanctuary. Encompassing 4,300 square miles of Lake Huron off the coast of northeast Michigan, it contains nearly 100 known shipwrecks and is estimated to hold as many as 150 more undiscovered wrecks.

On a series of missions to the sanctuary, Skinner’s team is deploying an IVER, a torpedo-shaped autonomous underwater vehicle provided by Michigan Technological University, to capture side-scan sonar imagery. They’ll use the data to train their model and perhaps even discover a new wreck or two in the process.

They’re also building synthetic data: artificially created models of shipwrecks and underwater terrain that will further hone the system’s skills. They hope to bring a prototype system back to the sanctuary for testing in the summer of 2023.

Heading out to sea

The initial expedition was just ramping up on May 24, 2022. Filmy, early-summer sunlight coated the Alpena Marina’s empty docks and silent fish cleaning station as Skinner prepared to lead Meadows, Lusardi and four other researchers out to the Thunder Bay sanctuary on a 50-foot NOAA research ship.

“Who’s the chief scientist on this mission?” Randy Gilmer, captain of the RV Storm research vessel, asks the team of researchers gathered in a semi-circle on the dock.

“I am,” says Skinner, raising a pink nail-polished hand. The trip marks the beginning of the first field expedition of the two-year project, which is Skinner’s first major research endeavor as a professor. She joined U-M as an assistant professor in 2021 after completing her master’s and PhD in robotics at U-M, followed by a stint as a postdoctoral researcher at the Georgia Institute of Technology.

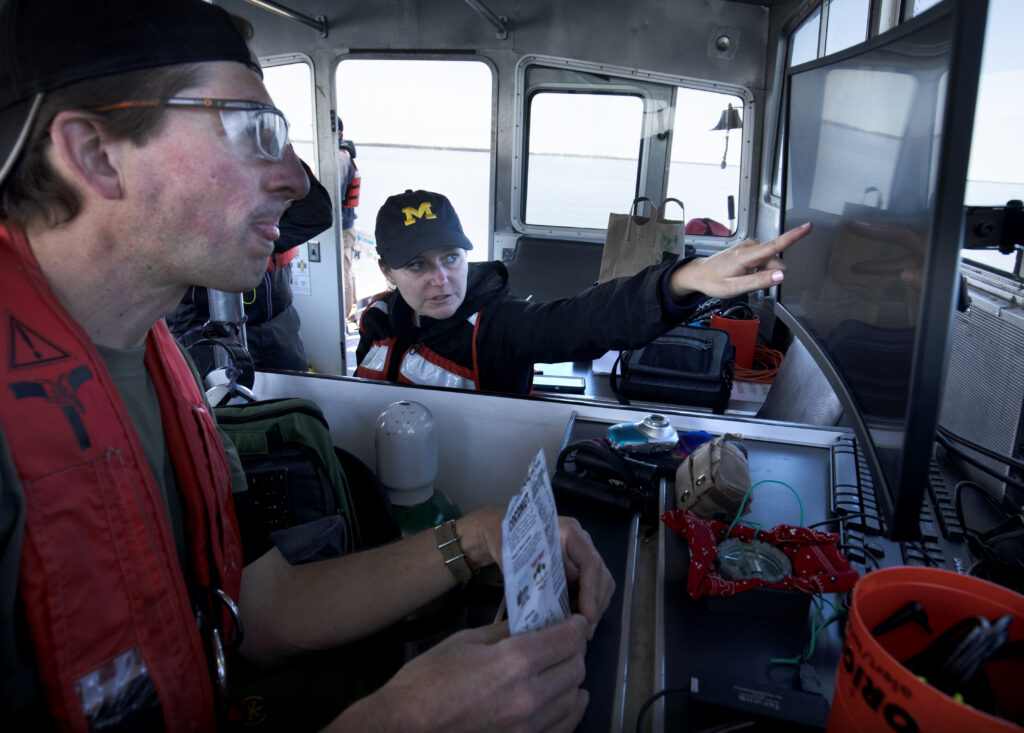

Leading a research expedition was just one challenge in a year that included securing funding and assembling a team, not to mention preparing to teach a new undergraduate course in the robotics program during a global pandemic. Today, she’s the only woman on the boat. But she’s used to it. Skinner knows exactly why she’s here.

“I draw from the excitement of the work I’m doing—that’s why I do this job,” she said. “I’ve really grown a lot in the last couple of years, learning to be flexible and confident about making decisions based on the data that’s available. We make decisions as a group, but ultimately, I need to be able to say, ‘This is a plan that I’m happy with. Let’s go.’”

As they load gear onto the ship, the air is heavy with the boiled-wood smell of a paneling factory nearby. Alpena made its name in the 19th-century timber trade, but today it’s a blue-collar town that manufactures wood and concrete products. Its prime location on Lake Huron also draws tourists to its beaches and the Thunder Bay sanctuary in summer.

The treacherous coastal waters that are now part of the sanctuary earned the title of “Shipwreck Alley” during the 19th-century heyday of Great Lakes shipping, when a steady stream of steamers ferried cargo like iron ore, limestone, timber, coal and grain to growing cities such as Detroit, Alpena, Green Bay and Chicago.

In fact, though, it’s likely that the entire Great Lakes region is a vast graveyard of undiscovered shipwrecks. In his 1899 book “History of the Great Lakes,” J.B. Mansfield includes data that suggest a staggering 5,999 shipwrecks in the Great Lakes between 1878 and 1897. That works out to roughly 300 wrecks a year, and since ships only operated for around eight months per year, it stands to reason that one to two ships were lost each day during the shipping season.
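Mansfield’s figures can be turned into a rough per-day rate with a few lines of arithmetic. This sketch assumes 20 shipping seasons (1878 through 1897, counting both years) of about eight months each, and uses an average month length of 365.25 / 12 days; those calendar assumptions are the sketch’s, not Mansfield’s.

```python
# Back-of-the-envelope loss rate from Mansfield's figures.
WRECKS = 5_999
YEARS = 1897 - 1878 + 1        # 20 shipping seasons, counting both end years
SEASON_MONTHS = 8              # ships operated about eight months a year
DAYS_PER_MONTH = 365.25 / 12   # assumed average month length

wrecks_per_season = WRECKS / YEARS
season_days = SEASON_MONTHS * DAYS_PER_MONTH
wrecks_per_day = wrecks_per_season / season_days
wrecks_per_week = wrecks_per_day * 7

print(f"{wrecks_per_season:.0f} per season")                               # prints "300 per season"
print(f"{wrecks_per_day:.1f} per day, {wrecks_per_week:.1f} per week")     # prints "1.2 per day, 8.6 per week"
```

The result, a little over one wreck per day of the season, is an average; losses were surely clustered around storms rather than spread evenly.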

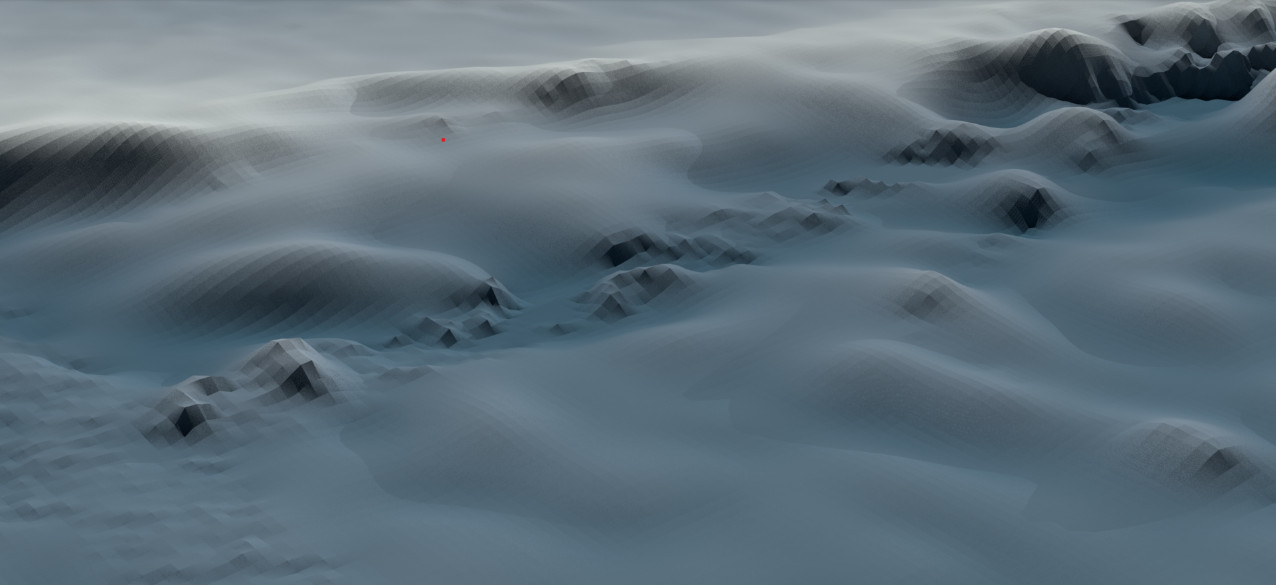

The floor of the Great Lakes is nearly as mysterious as the remote stretches of the Indian Ocean where MH370 was lost—even within the boundaries of the Thunder Bay sanctuary, only about 16% of the underwater landscape has been mapped in detail.

“Technology like this can be a game-changer,” said Jeff Gray, the superintendent of the Thunder Bay sanctuary. “Mapping is time-consuming and expensive but critical to understanding the Great Lakes and their rich history. This technology can help make that work more effective and efficient and will help protect the Great Lakes for future generations.”

The Storm is heading toward the wreck of the D.M. Wilson, a 179-foot coal hauler that sprang a leak and sank in 1894. While the Wilson’s crew was promptly rescued, the ship itself remains largely intact to this day—the cold, fresh water here preserves shipwrecks and other artifacts in remarkably good condition.

Huddled around Skinner’s laptop, the team is making last-minute tweaks to the path that the IVER will take as it scans the wreck. Their goal is to run the IVER at a depth that will capture a wide swath of imagery without giving up too much detail, while maximizing the vehicle’s limited battery life and minimizing the risk of a collision with the wreck.

Meadows and his graduate student Christopher Pinnow, also on the ship, are experts on the ins and outs of the IVER. Lusardi has dived the wreck countless times over the years and knows every inch. But the buck stops with Skinner, poring over her laptop. She considers the group’s input and, after a few minutes of concentration, gives Pinnow the tweaks to the path he’ll program into the IVER.

As they approach the wreck, Advaith Sethuraman, a graduate researcher in Skinner’s lab, carefully unstraps the IVER from its cradle. After being released from the ship, it will dive underwater and travel in a pre-set back-and-forth pattern called a “lawn mower,” capturing side-scan sonar imagery of the D.M. Wilson for around 20 minutes before bobbing back to the surface.
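The “lawn mower” pattern is easy to express as a list of waypoints. The sketch below is a hypothetical illustration, not the software the team uses to program the IVER: it lays out parallel legs in a local coordinate frame in meters, reversing direction on each leg. The leg length and spacing values are invented; in practice the spacing would be chosen so that neighboring sonar swaths overlap.

```python
from typing import List, Tuple

Waypoint = Tuple[float, float]  # (x east, y north) in meters, local frame (assumed)

def lawnmower(origin: Waypoint, leg_length: float, spacing: float,
              n_legs: int) -> List[Waypoint]:
    """Return waypoints for a back-and-forth survey of n_legs parallel legs.

    Legs run along the x axis; each new leg is offset by `spacing` in y and
    runs in the opposite direction from the one before it.
    """
    x0, y0 = origin
    waypoints: List[Waypoint] = []
    for i in range(n_legs):
        y = y0 + i * spacing
        # Even legs run east, odd legs run back west.
        start, end = (x0, x0 + leg_length) if i % 2 == 0 else (x0 + leg_length, x0)
        waypoints.append((start, y))
        waypoints.append((end, y))
    return waypoints

# Eight legs, matching the survey described in the video; distances are illustrative.
path = lawnmower(origin=(0.0, 0.0), leg_length=200.0, spacing=30.0, n_legs=8)
print(path[:4])  # [(0.0, 0.0), (200.0, 0.0), (200.0, 30.0), (0.0, 30.0)]
```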

Sethuraman, tall with a thick beard, green-framed eyeglasses and ruby earrings, helps handle the IVER on the ship and shepherds the data it captures. One of his chief tasks on the larger project is to figure out how to generate the artificial data that will help train the model. It’s an engineering puzzle that borders on the philosophical: What is the essence of a shipwreck?

“For example, we know that shipwrecks in the Great Lakes have a lot of mussels growing on them, so I could add noise to the synthetic data that looks like mussels,” he said. “But as soon as I introduce the human idea of, ‘This is a mussel,’ I’m telling the system what to look for, and that could reduce its ability to generalize to other objects of interest that might not have mussels. What we want is for the system to figure out on its own what’s important—and that could be a feature or a pattern that we aren’t even aware of. It’s a way to build systems that are smarter than we are.”

They aim to build the system around a more sophisticated type of machine learning model called a “deep neural network.” Instead of learning only from data that humans have labeled, the system is meant to learn what ordinary terrain looks like and detect anomalies it didn’t encounter during training. The team expects this to let it learn from far less labeled data and ultimately be more adaptable to varying conditions.
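The core idea, learning what ordinary terrain looks like and flagging whatever doesn’t fit, can be illustrated with a far simpler model than a deep neural network. This sketch swaps in a basic statistical baseline and is not the team’s method: it summarizes hypothetical sonar tiles by brightness and texture, learns the typical range of those features from terrain-only tiles, and scores new tiles by how many standard deviations they sit from normal. The tile format, the synthetic generators and the threshold are all assumptions for illustration.

```python
import random
import statistics
from typing import List, Sequence, Tuple

Patch = List[float]  # flattened sonar intensities for one image tile (hypothetical format)

def features(patch: Sequence[float]) -> Tuple[float, float]:
    """Summarize a tile by its mean brightness and its texture (standard deviation)."""
    return statistics.fmean(patch), statistics.pstdev(patch)

class TerrainModel:
    """Learns what ordinary terrain tiles look like; scores how unusual new tiles are."""

    def fit(self, background: Sequence[Patch]) -> "TerrainModel":
        cols = list(zip(*(features(p) for p in background)))
        self.mu = [statistics.fmean(c) for c in cols]
        self.sigma = [statistics.pstdev(c) for c in cols]
        return self

    def score(self, patch: Patch) -> float:
        """Largest z-score across features: distance from typical terrain."""
        return max(abs(f - m) / s for f, m, s in zip(features(patch), self.mu, self.sigma))

def synthetic_terrain(rng: random.Random, n: int = 64) -> Patch:
    # Hypothetical stand-in for seabed returns: moderate brightness, mild speckle.
    return [rng.gauss(0.4, 0.05) for _ in range(n)]

def synthetic_wreck(rng: random.Random, n: int = 64) -> Patch:
    # Hypothetical stand-in for a wreck: bright hull returns beside a dark acoustic shadow.
    half = n // 2
    return [rng.gauss(0.9, 0.05) for _ in range(half)] + [rng.gauss(0.05, 0.02) for _ in range(n - half)]

rng = random.Random(0)
# Train only on terrain: the model never sees a wreck.
model = TerrainModel().fit([synthetic_terrain(rng) for _ in range(500)])
THRESHOLD = 4.0  # assumed cut-off; a real system would tune this on held-out data
print("terrain tile score:", round(model.score(synthetic_terrain(rng)), 2))
print("wreck-like tile flagged:", model.score(synthetic_wreck(rng)) > THRESHOLD)
```

Because the model is fit only on terrain, nothing in it encodes what a wreck looks like; anything far enough from normal gets flagged, which mirrors the anomaly-detection framing Skinner describes, though a deep network would learn far richer features than two summary statistics.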

After loading the route plans from their laptops onto the IVER through a cable, Sethuraman and Lusardi carry it to the back of the ship. Launching it is a delicate operation—the two men work together, crouching over the rear of the boat and slowly but steadily lowering it into the water. The calm conditions make this step a bit less dicey, reducing the risk that a wave will smash the IVER against the boat or snatch it out of the researchers’ hands.

For Lusardi, developing the next generation of technology is about much more than new algorithms and the excitement of discovery.

A lifetime exploring the aftermath of disaster has made him keenly aware of the human toll of maritime tragedies.

Technology that heals

Over the past several years, Lusardi has expanded the scope of his research to the thousands of aircraft that have been lost in the Great Lakes and other U.S. waterways. By tracking down and diving wrecked airplanes, he’s able to find answers and provide a sense of closure to the families affected by the crashes. The wrecks he has researched, mostly military planes and private, single-engine craft, are far smaller than MH370. But to the families of their pilots, crew and passengers, the closure they provide is just as meaningful.

“I know of about 1,100 airplanes that have crashed in the Great Lakes, and there’s just something about those planes,” he said. “I feel a connection with the people who fly them.”

Lusardi is confident that systems like the one Skinner’s team is developing will one day provide more answers to more families and ultimately make both shipping and aviation safer. But today, he also has a more immediate goal in mind.

Not far from where the crew is preparing to launch the IVER, a single-engine private plane is believed to have crashed in the 1960s, killing all four people on board. The victims were recovered, but the aircraft itself has never been found. Right now, the wrecked plane is somewhere beneath Lusardi’s feet. So there’s a chance—a small one—that when the IVER surfaces, it could be carrying the first-ever images of the downed craft.

“I’ve been in touch with dozens of sisters and sons and daughters and wives of missing flight crews, both civilian and military,” he said. “They’re powerful stories, and they really want answers. I think this technology can help provide some of that. I really think it can.”

He watches as the IVER glides away from the stern of the boat. Trailing delicate ripples in the glassy water, it shrinks toward the horizon before turning and disappearing beneath the surface of the lake.

This research was funded by NOAA Ocean Exploration.

Deep secrets

The tools we use to map the seafloor

After thousands of years of human exploration, how can so much of the underwater world still be unknown? The simple fact is that, until surprisingly recently, there was no good way to map out large swaths of the seafloor.

Satellite altimetry provides us with a complete map, but its low resolution can only offer vague contours of the seafloor. Sonar can give us a more detailed view, but it’s estimated that it could take a single ship and its crew up to 1,000 years to map all of Earth’s oceans with modern sonar.

Here’s a guide to ocean mapping technology over the years.

Satellite altimetry

Even on the calmest day, the surface of the ocean is not flat. Because of variations in gravitational pull, it undulates slightly, following the contour of the seafloor below. Satellite altimetry, invented in the 1980s, can observe these variations and piece together a complete—but very rough—map of the sea bottom.

The first satellite altimetry maps of the ocean floor couldn’t detect any objects smaller than about 20 miles across. Even today, the best satellite altimetry maps have a resolution of about one mile.

Side-scan sonar

Invented around 1960, side-scan sonar uses a transducer, usually mounted to the bottom of a boat, to emit a single-frequency pulse of sound and measure what’s reflected back. Its high resolution makes it useful for getting detailed images of shipwrecks and other known objects on the seafloor. However, its ability to measure depth is very limited, making it most useful as a two-dimensional imaging tool.

The frequency of side-scan sonar can be adjusted for varying sea conditions; lower frequencies can penetrate deeper water than higher ones but have a lower resolution. Side-scan sonar resolution varies widely, but it can provide resolutions of less than an inch at close range.
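The trade-off between frequency and resolution comes down largely to wavelength. Wavelength equals the speed of sound divided by frequency, and as a rule of thumb finer detail requires shorter wavelengths. Higher frequencies are also absorbed more quickly by water, which is why they don’t reach as far. The sketch below assumes a nominal sound speed of 1,500 meters per second (the real value varies with temperature, salinity and depth), and the three frequencies are illustrative, not the specifications of any particular instrument.

```python
SOUND_SPEED_M_PER_S = 1_500.0  # nominal speed of sound in water (assumed constant)

def wavelength_cm(frequency_hz: float, c: float = SOUND_SPEED_M_PER_S) -> float:
    """Acoustic wavelength in centimeters: lambda = c / f, converted from meters."""
    return 100 * c / frequency_hz

for khz in (100, 400, 900):  # illustrative side-scan frequencies (assumed)
    print(f"{khz} kHz -> wavelength {wavelength_cm(khz * 1_000):.2f} cm")
```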

Multibeam sonar

Multibeam sonar systems became popular in the 1990s and are now the industry standard for ocean exploration. Instead of side-scan sonar’s single frequency, multibeam sonar sends out a series of fan-shaped sound waves at varying frequencies. The system can scan a swath up to five miles wide, far wider than side-scan, and it can also take three-dimensional measurements.

While multibeam sonar can’t match the resolution of side-scan sonar, its ability to scan in three dimensions makes it a more versatile tool for mapping the underwater world. Its digital data also lends itself to colorful, computer-generated visualizations that can convey detailed information to researchers. Multibeam sonar can achieve resolutions as fine as about one yard.