Individual finger control for advanced prostheses demonstrated in primates

An electrode array implanted in the brain predicts finger motions in near real time.

In a first, a computer that could fit on an implantable device has interpreted brain signals for precise, high-speed, multifinger movements in primates. This key step toward giving those who have lost limb function more natural, real-time control over advanced prostheses—or even their own hands—was achieved at the University of Michigan.

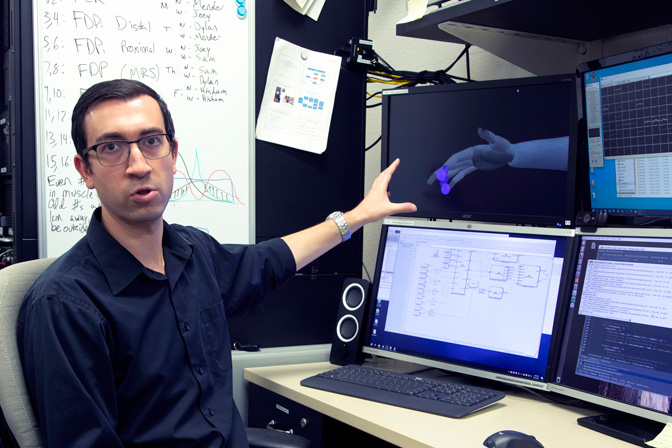

“This is the first time anyone has been able to control multiple fingers precisely at the same time,” said Cindy Chestek, an associate professor of biomedical engineering. “We’re talking about real-time machine learning that can drive an index finger on a prosthesis separately from the middle, ring or small finger.”

Brain-machine interfaces capable of providing real-time control over high-tech devices are under development by a range of organizations, from government institutions such as DARPA to private ventures such as Elon Musk’s Neuralink. A major hurdle for players in the field, however, has been getting continuous brain control of multiple fingers.

So far, continuous individual finger control has only been achieved by reading muscle activity, which cannot be used in cases of muscle paralysis. And current technologies for harnessing brain signals have allowed primate or human test subjects to manipulate prosthetics with simple movements—much like a pointer or pincer.

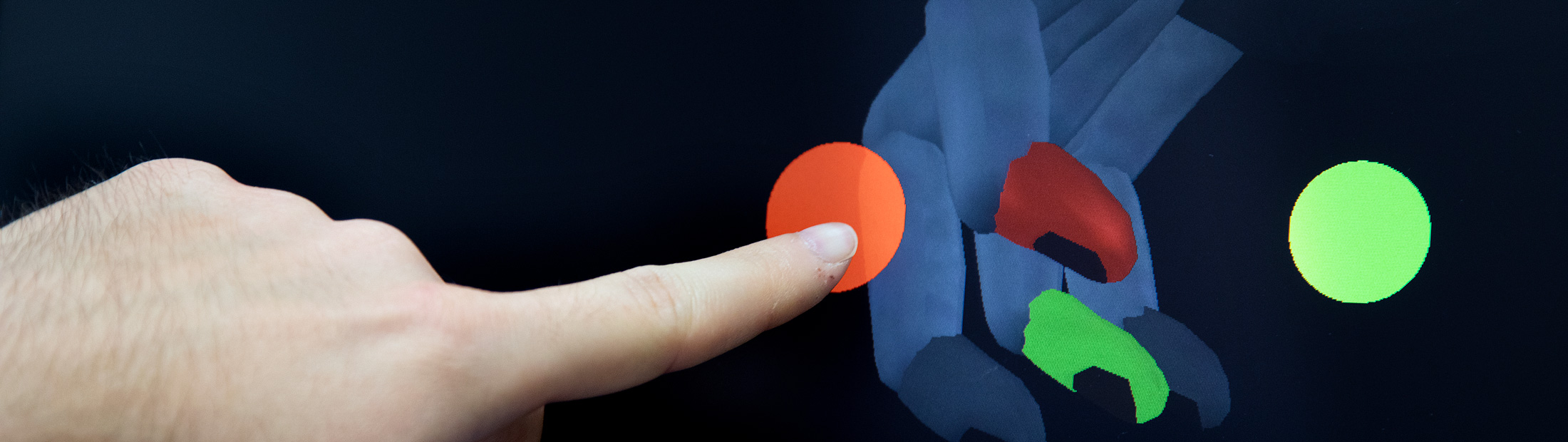

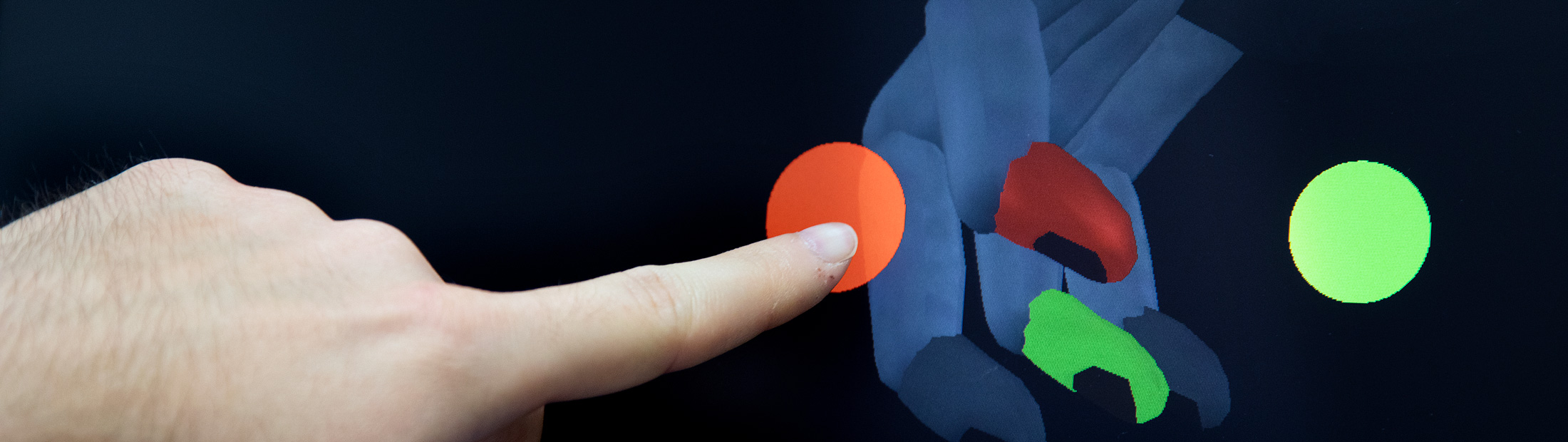

In contrast, the system developed in Chestek’s lab enabled primate subjects to create intricate movements for digital “hands” on a computer screen. The technology has the potential to benefit people with paralysis resulting from spinal cord injury, stroke, ALS or other neurological diseases.

“Not only have we demonstrated the first ever brain-controlled individual finger movements, but it was using computationally efficient recording and machine learning methods that fit well on implantable devices,” said Sam Nason, a Ph.D. student in biomedical engineering and first author of the paper in the journal Neuron. “We are hoping that 10 years from now, people with paralysis can take advantage of this technology to control their own hands again using an implantable brain-machine interface.”

The system gathers signals from the primary motor cortex, the brain center controlling movement, through an implanted 4 mm x 4 mm electrode array. The array provides 100 small contact points in the cortex, potentially creating 100 channels of information and enabling the team to capture signals at the neuron level. Chestek says very similar implants have been used in humans for decades and are not painful.

Key to the effort was defining a training task that would systematically separate movements of the fingers, forcing them to move independently unless instructed otherwise. Brain activity corresponding to those movements could not be isolated without the movements themselves being isolated.

The team achieved this by showing two able-bodied rhesus macaque monkeys an animated hand on-screen with two targets, one presented for the index finger and the other for the middle, ring and small fingers as a group. The targets were colored to indicate which fingers should go to each one. The monkeys freely controlled the animated hand through a system that measured the positions of their fingers, and they hit the targets to get apple juice as a reward.

When the monkeys moved their fingers, the implanted sensor captured the signals from the brain and transferred the data to computers that used machine learning to predict the finger movements. After about five minutes of training time for the machine learning algorithm, these predictions were then used to directly control the animated hand from the monkeys’ brain activity, bypassing any movements of their physical fingers.
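The article does not name the machine learning algorithm the team used, so the following is only an illustrative sketch of the general idea: fit a linear map from per-channel brain activity to the positions of the two finger groups using about five minutes of data, then predict finger positions from brain activity alone. It uses ridge regression as a stand-in technique and entirely synthetic data. The bin width, the simulated "tuning" of each channel, and all function names are assumptions made for illustration, not details from the study.

```python
import numpy as np

N_CHANNELS = 100        # contact points on the array, per the article
N_FINGER_GROUPS = 2     # index finger; middle, ring and small fingers as a group
BIN_SECONDS = 0.05      # ASSUMED bin width; the article does not state one
TRAIN_SECONDS = 5 * 60  # "about five minutes" of training data


def add_bias(features):
    """Append a constant column so the decoder can learn an offset."""
    return np.hstack([features, np.ones((features.shape[0], 1))])


def fit_ridge_decoder(features, positions, ridge=1.0):
    """Solve (X^T X + ridge*I) W = X^T Y for the decoder weights W.

    For simplicity the ridge penalty is also applied to the bias term.
    """
    X = add_bias(features)
    gram = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ positions)


def decode(weights, features):
    """Predict finger-group positions from channel features alone."""
    return add_bias(features) @ weights


def simulate_session(n_bins, rng, tuning, noise=0.1):
    """Synthetic stand-in for a recording: HYPOTHETICAL linear tuning plus noise."""
    t = np.arange(n_bins) * BIN_SECONDS
    positions = np.column_stack([
        0.5 + 0.5 * np.sin(0.9 * t),        # index finger, 0 = open, 1 = closed
        0.5 + 0.5 * np.sin(1.7 * t + 1.0),  # middle-ring-small group
    ])
    features = positions @ tuning + noise * rng.standard_normal((n_bins, N_CHANNELS))
    return features, positions


def demo(seed=0):
    """Train on ~5 minutes of synthetic data, then decode a held-out stretch."""
    rng = np.random.default_rng(seed)
    tuning = rng.standard_normal((N_FINGER_GROUPS, N_CHANNELS))
    n_train = round(TRAIN_SECONDS / BIN_SECONDS)
    train_x, train_y = simulate_session(n_train, rng, tuning)
    test_x, test_y = simulate_session(200, rng, tuning)
    weights = fit_ridge_decoder(train_x, train_y)
    predicted = decode(weights, test_x)
    # Correlation between decoded and true position, one value per finger group.
    return [float(np.corrcoef(predicted[:, k], test_y[:, k])[0, 1])
            for k in range(N_FINGER_GROUPS)]


if __name__ == "__main__":
    print(demo())
```

Because each finger group gets its own output column, the sketch can move the index finger independently of the other three, which mirrors the separation the training task was designed to produce. A real decoder would work on recorded neural features rather than simulated ones and would likely be more sophisticated than a single linear fit.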

With direct access to the motor cortex, the speed with which U-M’s technology can capture, interpret and relay signals comes close to real time. In some instances, hand movements that take the monkeys 0.5 seconds to accomplish in the real world can be repeated through the interface in 0.7 seconds.

“It’s really exciting to be demonstrating these new capabilities for brain machine interfaces at the same time that there’s been huge commercial investment in new hardware,” said Nason. “I think that this will all go forward much faster than people think.”

The team is going through regulatory review to move this research into clinical trials with humans. Those experiments could begin as early as next year.

All procedures were approved by the University of Michigan Institutional Animal Care and Use Committee. The research was supported by the National Science Foundation, National Institutes of Health, Craig H. Neilsen Foundation, A. Alfred Taubman Medical Research Institute at U-M and the U-M interdisciplinary seed-funding program MCubed.