Memory-processing unit (MPU) could bring memristors to the masses

AI, weather forecasting and data science would all benefit from computers that store and process data in the same place. Memristors could be up to the task.

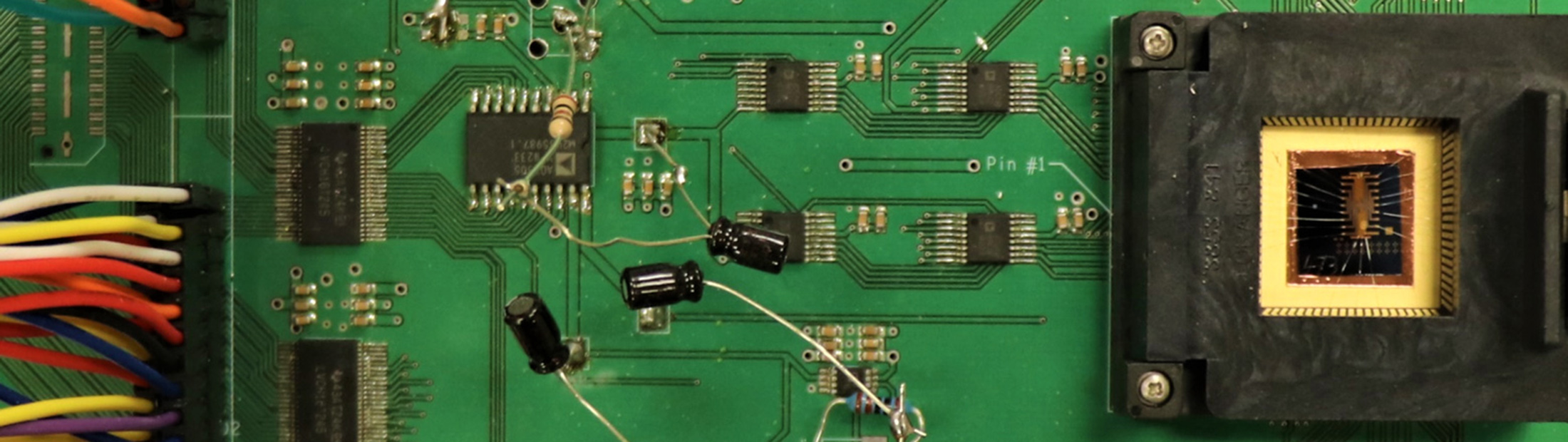

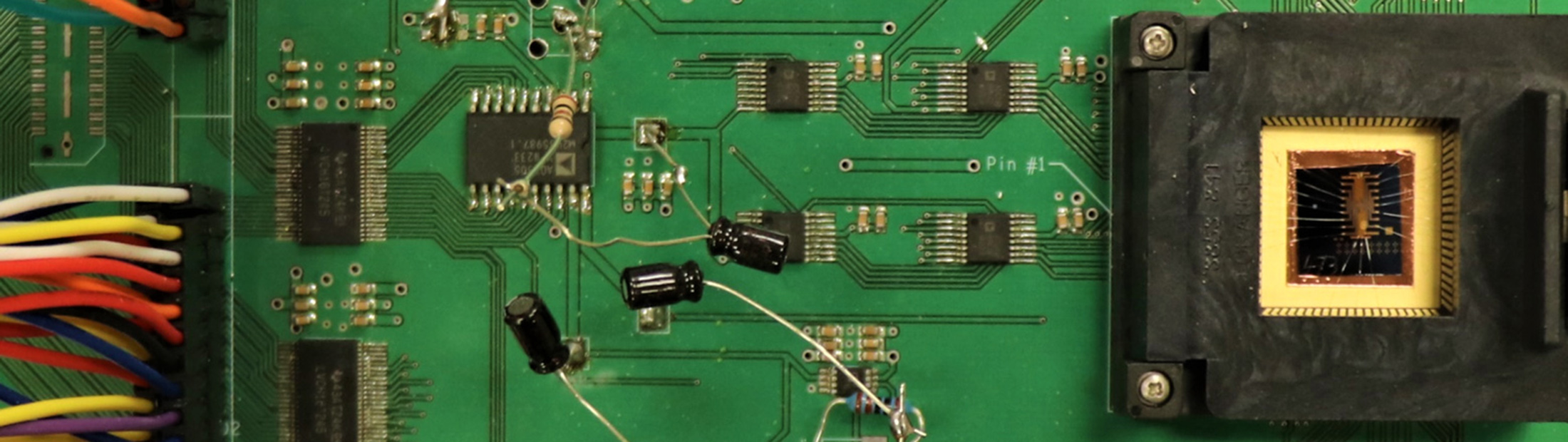

A new way of arranging advanced computer components called memristors on a chip could enable them to be used for general computing, potentially cutting energy consumption by a factor of 100. This would improve performance in low-power environments such as smartphones, or make for more efficient supercomputers.

“Historically, the semiconductor industry has improved performance by making devices faster. But although the processors and memories are very fast, they can’t be efficient because they have to wait for data to come in and out,” said Wei Lu, a professor of electrical and computer engineering at the University of Michigan and co-founder of the memristor startup Crossbar Inc.

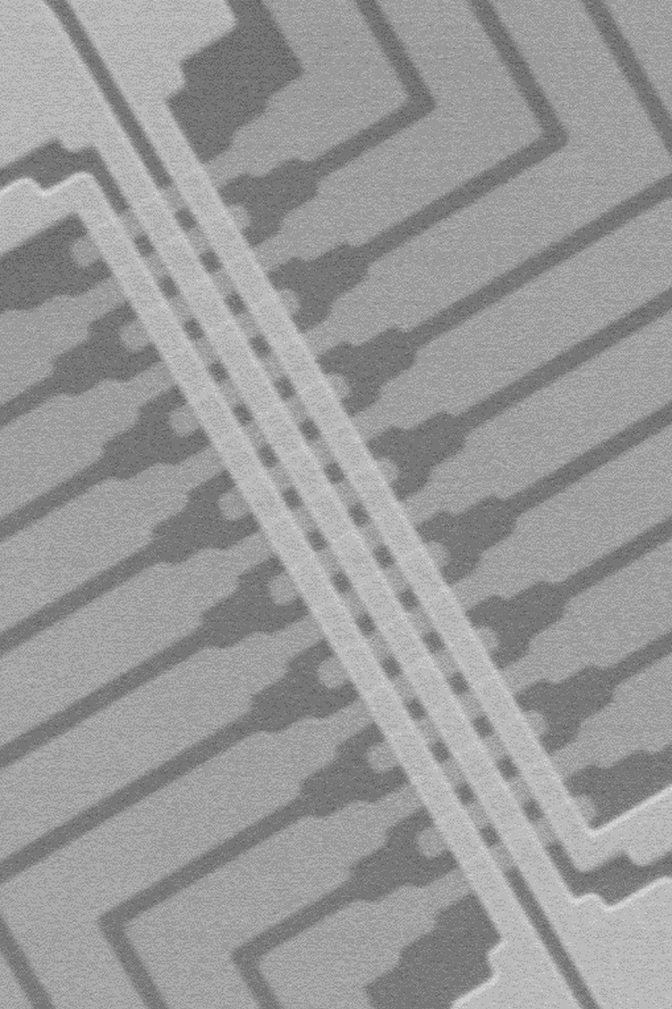

Memristors might be the answer. Named as a portmanteau of memory and resistor, they can be programmed to have different resistance states—meaning they store information as resistance levels. These circuit elements enable memory and processing in the same device, cutting out the data transfer bottleneck experienced by conventional computers in which the memory is separate from the processor.

However, unlike ordinary bits, which are 1 or 0, memristors can have resistances that are on a continuum. Some applications, such as computing that mimics the brain (neuromorphic), take advantage of the analog nature of memristors. But for ordinary computing, trying to differentiate among small variations in the current passing through a memristor device is not precise enough for numerical calculations.

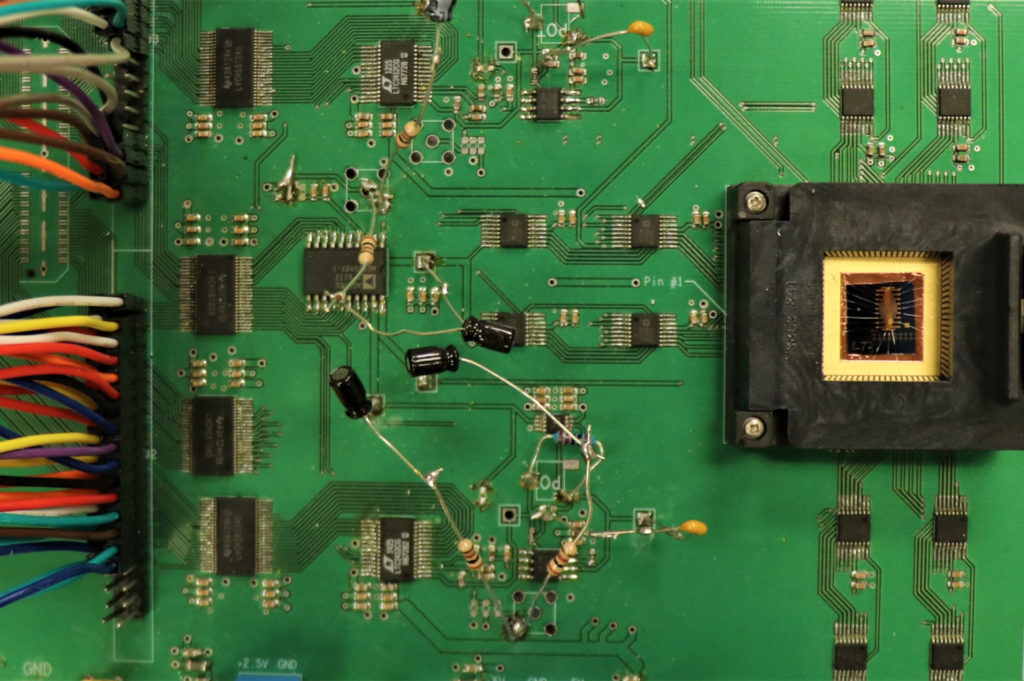

Lu and his colleagues got around this problem by digitizing the current outputs, defining current ranges as specific bit values (i.e., 0 or 1). The team was also able to map large mathematical problems into smaller blocks within the array, improving the efficiency and flexibility of the system.
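The two ideas in the paragraph above can be illustrated in a few lines of Python. This is only a rough sketch, not the team's implementation: the function names `to_bit` and `blocked_matvec`, the threshold value, and the sample currents are all made up for illustration.

```python
def to_bit(current, threshold):
    """Read an analog current as a bit: 1 at or above the threshold, else 0."""
    return 1 if current >= threshold else 0


def blocked_matvec(matrix, vector, block):
    """Compute matrix times vector by visiting block x block tiles one at a time."""
    n_rows, n_cols = len(matrix), len(vector)
    result = [0.0] * n_rows
    for r0 in range(0, n_rows, block):
        for c0 in range(0, n_cols, block):
            # Each tile stands in for one small array; partial sums accumulate.
            for r in range(r0, min(r0 + block, n_rows)):
                for c in range(c0, min(c0 + block, n_cols)):
                    result[r] += matrix[r][c] * vector[c]
    return result


# Hypothetical current readings in amperes, with an assumed 2.5 microamp cutoff.
currents = [0.1e-6, 4.8e-6, 5.2e-6, 0.3e-6]
bits = [to_bit(i, threshold=2.5e-6) for i in currents]  # [0, 1, 1, 0]

# A 4x4 problem handled as four 2x2 tiles.
matrix = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
vector = [1, 0, 1, 0]
tiled = blocked_matvec(matrix, vector, block=2)
```

Splitting the work into tiles gives the same answer as a single large product, which is why a big matrix can in principle be spread across many small arrays.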

Computers with these new blocks, which the researchers call “memory-processing units” (MPUs), could be particularly useful for implementing machine learning and artificial intelligence algorithms. They are also well suited to tasks that are based on matrix operations, such as simulations used for weather prediction. The simplest mathematical matrices, akin to tables with rows and columns of numbers, can map directly onto the grid of memristors.

Once the memristors are set to represent the numbers, operations that multiply and sum the rows and columns can be taken care of simultaneously, with a set of voltage pulses along the rows. The current measured at the end of each column contains the answers. A typical processor, in contrast, would have to read the value from each cell of the matrix, perform multiplication, and then sum up each column in series.
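As a rough numerical picture of that step, suppose each memristor's stored number is its conductance. Ohm's law then gives the current through each cell as voltage times conductance, and the currents in a column add up. The sketch below models this in plain Python. The function name `crossbar_column_currents` and the sample values are assumptions for illustration, and the loops compute serially what the physical array would produce all at once.

```python
def crossbar_column_currents(conductances, row_voltages):
    """Return the total current per column of an idealized crossbar.

    conductances[r][c] is the stored value at row r, column c (siemens).
    row_voltages[r] is the voltage applied to row r (volts).
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for r, voltage in enumerate(row_voltages):
        for c in range(n_cols):
            # Ohm's law per cell; summing along the column models the shared wire.
            currents[c] += voltage * conductances[r][c]
    return currents


# Illustrative values only, not measured device data.
G = [[1.0, 2.0],
     [3.0, 4.0]]
V = [1.0, 0.5]
I = crossbar_column_currents(G, V)  # [2.5, 4.0]
```

Each entry of `I` is one multiply-and-sum over a whole column, the operation a conventional processor would carry out cell by cell.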

“We get the multiplication and addition in one step. It’s taken care of through physical laws. We don’t need to manually multiply and sum in a processor,” said Lu.

His team chose to solve partial differential equations as a test for a 32×32 memristor array, which Lu imagines as just one block of a future system. These equations, including those behind weather forecasting, underpin many problems in science and engineering but are very challenging to solve. The difficulty comes from the complicated forms and multiple variables needed to model physical phenomena.

When solving partial differential equations exactly is impossible, solving them approximately can require supercomputers. These problems often involve very large matrices of data, so the memory-processor communication bottleneck is neatly solved with a memristor array. The equations Lu’s team used in their demonstration simulated a plasma reactor, such as those used for integrated circuit fabrication.
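To see why approximate solutions lead to matrices, consider a deliberately simple stand-in: the one-dimensional equation -u'' = f with u fixed at zero on both ends. The article does not say which numerical method the team used, so the finite-difference grid and Jacobi iteration below are assumptions chosen for illustration, and `jacobi_poisson_1d` is a hypothetical name.

```python
def jacobi_poisson_1d(f, h, iterations):
    """Approximate -u'' = f on a uniform grid, with u = 0 at both boundaries."""
    n = len(f)
    u = [0.0] * n
    for _ in range(iterations):
        new_u = [0.0] * n
        for i in range(n):
            left = u[i - 1] if i > 0 else 0.0
            right = u[i + 1] if i < n - 1 else 0.0
            # Rearranged from (-left + 2*u_i - right) / h**2 = f_i.
            new_u[i] = 0.5 * (left + right + h * h * f[i])
        u = new_u
    return u


n = 9                  # interior grid points on the interval (0, 1)
h = 1.0 / (n + 1)      # grid spacing
f = [1.0] * n          # constant source term
u = jacobi_poisson_1d(f, h, iterations=2000)
midpoint = u[4]        # approximates the exact value x * (1 - x) / 2 at x = 0.5
```

Every sweep of the loop amounts to multiplying the current guess by a sparse matrix, which is the kind of repeated matrix operation a memristor grid is suited to. Realistic problems use far larger grids in two or three dimensions.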

This work is described in a paper titled “A general memristor-based partial differential equation solver,” published in the journal Nature Electronics.

It was supported by the Defense Advanced Research Projects Agency (DARPA) (grant no. HR0011-17-2-0018) and by the National Science Foundation (NSF) (grant no. CCF-1617315).