Designing intelligence

Can we create machines who learn like we do?

Can we create machines who learn like we do?

We’re summoning the demon. That’s what Elon Musk, serial entrepreneur on a cosmic scale, said about AI research to an audience at MIT last October.

“I think we should be very careful about artificial intelligence. If I had to guess at what our biggest existential threat is, it’s probably that. … In all those stories where there’s the guy with the pentagram and the holy water, it’s like, he’s sure he can control the demon.

“Doesn’t work out.”

The British astrophysicist and brief historian of time Stephen Hawking broadly agrees. In December, he told the BBC, “The development of full artificial intelligence could spell the end of the human race.”

Wow. That’s heavy. But this isn’t the first time that AI has seen a lot of hype. And last time, it wasn’t the human race that disappeared.

“Artificial intelligence has gone through different stages,” said Wei Lu, an associate professor of electrical engineering and computer science at U-M. “It has died twice already!”

AI’s deaths – or its winters, as insiders more generally refer to the field’s downturns – are characterized by disillusionment, criticism and funding cuts following periods of excitement, money and high expectations. The first full-scale funeral, around 1974, was caused in part by a scathing report on the field as well as drastically reduced funding from what is now the Defense Advanced Research Projects Agency (DARPA). The second, around 1987, also involved heavy cuts from DARPA.

But hey, it’s now summertime for AI, and there is serious money at play. Google snapped up the company DeepMind for a reported $400 million and Facebook has started its own AI lab. Ray Kurzweil, futurist and AI don for Google, said machines could match human intelligence by 2029.

Technology certainly seems smarter than it was a decade ago. Most smartphones can now listen and talk. Computers are getting much better at interpreting images and video. Facebook, for instance, can recognize your face if you’re tagged in enough photos. These advances are largely thanks to machine learning, the technique of writing algorithms that can be “trained” to recognize images or sounds by analyzing many examples (see The Perception Problem sidebar with this story).

In spite of the AI optimism (to the point of existential pessimism), the field might best be described as a hot mess. Hot because it’s a whirlwind of activity – you’ve got self-driving cars and virtual assistants. You’ve got artificial neural networks parsing images, audio and video. Computer giants are starting to make special chips to run the artificial neural networks.

But the challenge of organizing these pieces into an intelligent system has taken a back seat to the development of the new techniques. That’s the mess part.

John Laird, the John L. Tishman Professor of Engineering at U-M, is one of those trying to bring human-like intelligence back to the fore in AI. Current AI systems are great at the tasks for which they have been programmed, but they are missing our flexibility. He cites IBM’s Jeopardy-winning Watson. Drawing on an extensive stockpile of knowledge, it’s tops for answering questions (or questioning answers, if you prefer).

“But you can’t teach it Tic Tac Toe,” Laird pointed out. (Some solace for human champion Ken Jennings.)

Humans can learn how to do new things in a variety of ways – through conversations, demonstrations and reading, to name a few. No one has to go in and hand-code our neurons. But how do you get computers to learn like that? Researchers come at it in a variety of ways.

Lu’s pioneering work with a relatively new electrical component feeds into a bottom-up strategy, with circuits that can emulate the electrical activity of our neurons and synapses. Build a brain, and the mind will follow. Laird, on the other hand, is going straight for the mind. He is a leader in cognitive architectures – systems inspired by psychology, with memories and processing behaviors designed to mirror the functional aspects of how humans learn.

These aren’t the only approaches (after all, who said the human brain was the ideal model for intelligence?), but these opposing philosophies represent a central question in the development of AI: if it is going to live up to its reputation, how brain-like does AI have to be?

Let’s start at the bottom. Much is known about the brain’s connections at the cellular level. Each neuron gathers electrical signals from those to which it is connected. When the total incoming current is high enough, it sends out an electrical pulse. That pulse is the neuron firing, also called spiking.

When a neuron fires, it provides input to the neurons connected to its outgoing “wires.” In terms of computers, that’s a processing behavior – the neurons filter their incoming signals and decide when to send one out. But the connections between the neurons, called synapses, change depending on the pulses that came before. Some pathways get stronger while others get weaker. And that, in computer terms, is memory.

Simple enough, right? Except the best estimates for the number of neurons in an adult human brain are in the tens of billions, with thousands of synapses per neuron.

Yet there are people trying to simulate that in all its complicated glory. They call themselves the Human Brain Project. Led by the Swiss Federal Institute of Technology in Lausanne, Switzerland, the group claims that it will model the brain down to its chemistry on a supercomputer. Europe’s scientific funding agency made a $1.3 billion bet on them in 2013.

Last summer, less than a year into the project, 500 neuroscientists around Europe condemned the cause as a waste of money and time. They say neuroscience doesn’t yet have the background knowledge for an accurate simulation.

“The question is where will the function come from?” said Lu. “Neuroscientists still don’t understand that, so there’s no guarantee that putting a system together with a large number of neurons and synapses, regulated by chemistry at the molecular level, actually will be very intelligent.”

Neuroscientists who aren’t boycotting the project will perform experiments designed to discover more details of how the brain functions. But you don’t need a billion simulated neurons to begin exploring how humans think using what neuroscience does know.

In 2012, Chris Eliasmith of the University of Waterloo in Ontario, Canada, made the news with a million-neuron simulation of a brain. It won’t be taking over the world any time soon, but it can recognize handwritten numbers, remember sequences, and make predictions about numerical patterns.

The virtual brain, called Spaun, can turn a handwritten image into the idea of the number, explained Eliasmith. “Spaun knows that it’s a number 2 and that 2 comes before 3 and after 1. All that kind of conceptual information is brought online,” he said.

Biological systems are very efficient dealing with very complex tasks in very complex environments because our hardware is built very differently.”

Wei Lu, associate professor of electrical engineering and computer science

Spaun can then use that idea to answer questions, some of which you might find on an IQ test. For instance, in one of the challenges highlighted in the team’s videos, Spaun is presented with 4:44:444, 5:55:?

“Spaun figures out the pattern in the digits – an abstract pattern that only humans can recognize,” said Eliasmith.

It responds 555 by drawing three 5s with a virtual arm, translating the idea into a sequence of motions.

OK, so that might not be the most impressive thing you ever saw a computer do, but what distinguishes Spaun is how it operates. Spaun is still the largest working brain simulation, and Eliasmith and his team are continuing to expand its abilities.

“Our real goal here is not to take a whole mess of neurons and see what happens. It’s to take neurons that are organized in a brain-like way and see how those neurons might control behavior,” said Eliasmith.

The neurons were divided among 21 individual networks representing different parts of the brain, and these networks were linked up to reflect how the equivalent sections of the brain are connected. These include the different levels of visual processing in the brain, portions that turn an image of a number into the idea of a number, parts that manipulate that idea and areas responsible for moving the virtual arm.

Intriguingly, Spaun is human-like in the way it makes mistakes. For instance, when recalling a long sequence of numbers, Spaun remembers the first and last numbers best, and gets a little fuzzy with those in the middle.

This ability to use what was learned in the past to perform better in the future is a hallmark of biological cognitive systems.”

Chris Eliasmith, University of Waterloo

One of Spaun’s upcoming features is a hippocampus, which Eliasmith describes as the part of the brain that records our experiences and extracts facts from them.

The team has also improved learning in the spiking neural networks, creating a two-tier system. While the higher tier is focused on learning the goal, the lower tier is concerned with the steps needed to reach that goal. The hierarchy makes it possible to apply previously learned skills to new tasks.

To test the system, the team first had it learn how to move an avatar to a target location in a virtual room. They used reinforcement learning, giving the avatar positive points when it reached the target and taking away a fraction of a point for every step it took to get there. The avatar doesn’t just want to win by reaching the target – it wants the max score. Then, with the same rules in place, the network learned to pick up an item from one spot and deliver it to another.

When the researchers made the system start cold with the delivery task, it only managed 76 percent of the possible points. But if the system first learned the simpler task of how to reach a location, it scored 96 percent, meaning it chose more efficient paths.

“This ability to use what was learned in the past to perform better in the future is a hallmark of biological cognitive systems,” said Eliasmith.

After Eliasmith’s group has finished developing these and other abilities, it will integrate them into Spaun. Part of the reason why the team first develops smaller models is because Spaun runs very slowly on the computers available to the team. It takes 2.5 hours of processing time to get 1 second of the full simulation – and that’s before the upgrades. But there may soon be a better way.

“Biological systems are very efficient dealing with very complex tasks in very complex environments because our hardware is built very differently,” said Lu.

Unlike conventional computers, with separate memory and processing, neural networks serve as both memory and processor. And since processing can occur in localized sections throughout, brains are also much better at multitasking. Traditional computers have to do most of that multitasking in a sequence, so it takes ages. Spaun would do much better running on hardware that works more like a neural network does.

Many consider artificial neural networks implemented on chips to be the next leap in computing, allowing faster processing with lower power needs for tasks like handling images, audio and video.

“Hardware is facing bottlenecks because we can’t keep making devices faster and faster,” said Lu. “This has forced people to reexamine neuromorphic approaches.”

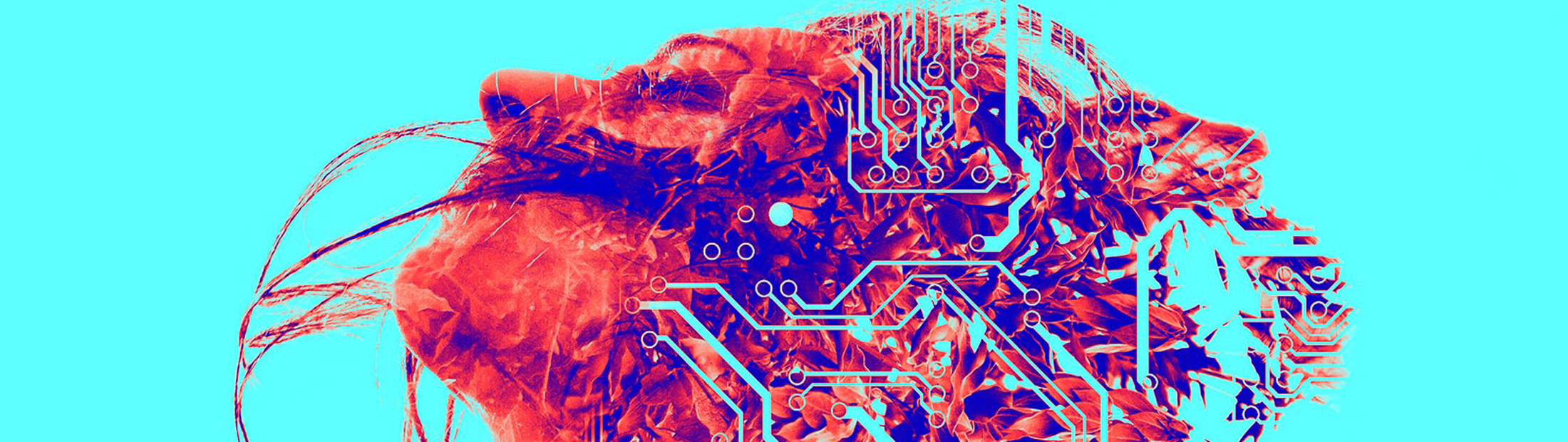

Qualcomm and IBM are right on this with the new chips Zeroth and True North. These chips are hardwired with versions of neurons and synapses in traditional computing parts, but a relatively new electronic component enables a more direct parallel with biology.

Memristors, only around since 2008, can play the role of the synapse. Like a synapse, a memristor modulates how easily an electrical current can pass depending on the current that came before. The memristor naturally allows the current flow between the wires to vary on a continuum. In contrast, the transistors in traditional processors either allow current to pass or they don’t. The different levels of resistance enable memristors to store more information in each connection.

The neuromorphic networks made by Lu’s group don’t look much like the wild web of cells in a biological neural network. Instead, the team produces orderly layers of parallel wires, with each layer running perpendicular to its neighbors. Memristors sit at the crossing points, linking the wires. The neurons are at the edges of the grid, communicating with one another through the wires and memristors. The circuits can be designed so that, like their biological counterparts, the electronic neurons only send out an electrical pulse after reaching a certain level of current input.

This hardware isn’t ready to scale to brainy proportions, in part because neuroscience doesn’t yet offer a clear picture of how to structure a brain. But it’s not too soon for the arrays to begin proving themselves capable of behaving like neural networks. Lu and his team have recently shown that crossbar memristor arrays can do the work of a virtual neural network, breaking down images into their features. This is a first step toward image recognition in a memristor network.

But of course, you don’t have to build AI from the neurons up. Researchers like Laird try to identify the brain functions that enable learning and other intelligent behavior and then recreate them in software. Laird has been developing the cognitive architecture Soar since the early 1980s. It is loosely based on the way that psychology breaks down our memories.

Soar has three types of memory – procedural, episodic and semantic. Semantic memory is a repository for facts, such as knowing that the capital of China is Beijing. Episodic memory is the memory of past experiences – what did you have for breakfast, or what did you do last time you had to solve a problem like this? And procedural memory is your internal how-to guide – it contains your skills.

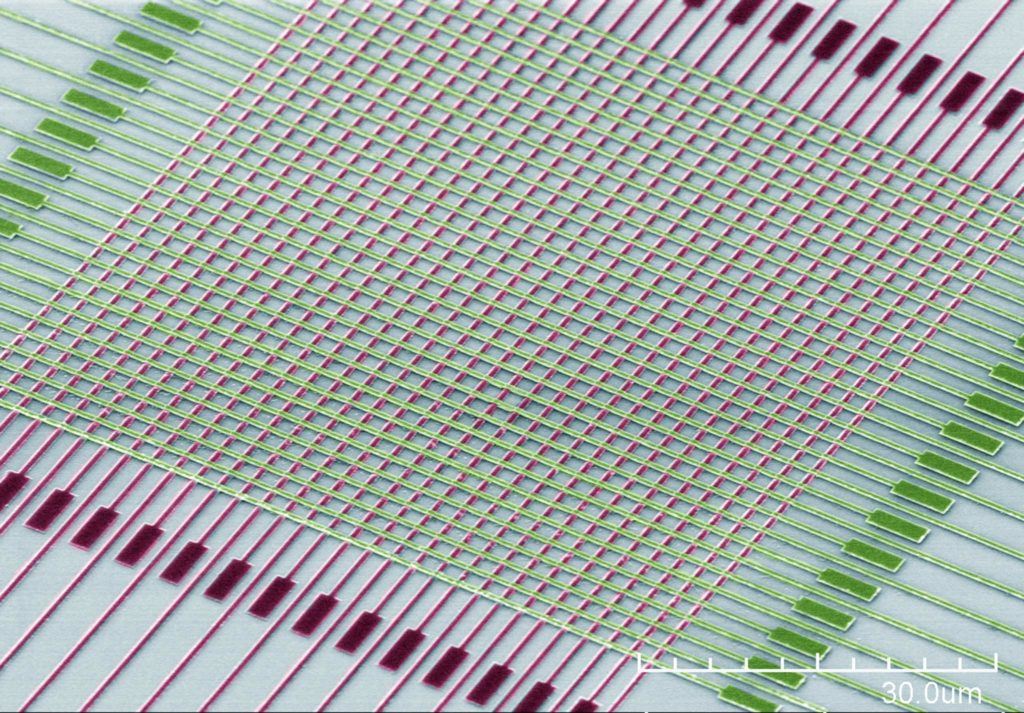

Soar is the mind behind the robot in Laird’s lab, called Rosie after the Jetsons’ automaton maid. This Rosie can’t clean house, but she can learn how to play games and puzzles from a human instructor.

“We start with games because there is a good definition of what the task is, and by playing against people, you can tell how good your system is,” said Laird.

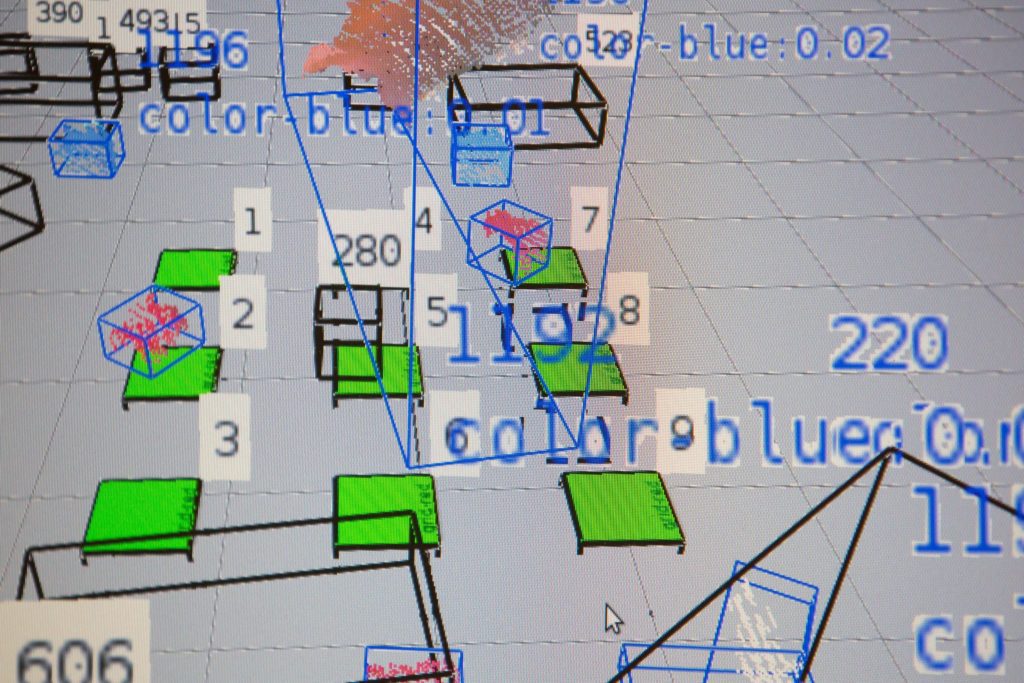

Rosie’s robotic arm comes out of the middle of a table, long white lattices with black motors for joints and a claw at the end for grasping blocks. She sees the table through a Microsoft Kinect camera, which gives her 3D vision. Laird’s graduate students equipped her with language-interpreting software that allows them to speak to Rosie.

James Kirk (yes, that’s his real name) demonstrated a game of Tic Tac Toe against Rosie, starting by teaching her. He told her that the playing pieces are blue and red – and that the goal is to get three pieces in a row. During the game, Rosie made a schoolboy error, focusing on getting two in a row when she should have stopped Kirk’s nearly complete run.

Kirk explained that Rosie is only looking at her current move – not predicting his next move, so he took it easy on the machine. “I blocked one of its wins, but it should be able to find the other one,” he said.

Rosie got her three in a row. She would have learned more if she lost, but maybe he didn’t want to embarrass her.

Rosie doesn’t like to lose. She won’t throw blocks or anything, but her own reinforcement learning system helps her to recognize mistakes and avoid repeating them.

Rosie is still at the stage of learning procedures, but Soar is capable of improving game play as well. For instance, this game in Rosie’s episodic memory would cause her to repeat the mistake for a while. Yet Soar is robust enough that after a few games with less sympathetic rivals, it could begin to understand that not blocking an opponent’s two-in-a-row results in a loss, even if it wasn’t explicitly looking an extra move ahead. View more videos of Rosie.

Soar can also think in images. It could compare the Tic Tac Toe board at a particular point in the game to boards from previous games, rotating or taking the mirror image of the board to see whether it was an equivalent situation at a different angle, for example.

In Tic Tac Toe, Rosie is given the skeleton of a procedure, and she has to fill in the gaps. A puzzle is a different matter. Given a set of rules, Rosie must deduce the procedure. And she does. Rosie doesn’t freeze up in an unfamiliar situation. She figures out her possible actions, assigns each action a weight for how likely it is to bring her closer to her goal, and then generates a random number to decide what to do.

She might have two available moves, let’s say options A and B. She is 80 percent sure that option A is right, but she thinks there’s a 20 percent chance that B might be best. If her random number generator produces a number between 1 and 80, she chooses A, but if it’s 81-100, she goes with B. Sometimes choosing an alternative action will result in a mistake, but other times, it allows Rosie to try a counterintuitive move.

The episodic memory is important to Rosie’s ability to learn a procedure. Yet Soar didn’t have that capability until the 2008 release. Watching the 2000 movie Memento, about a man who cannot make long-term memories, Laird realized how crippling it is for AI systems to lack a way to store their experiences. So episodic memory was a priority for that upgrade, beginning in the mid-2000s.

Episodic memory poses its own problems in terms of speed, though. The more memories Rosie has to sift through, the longer it takes her to make a decision. But like Spaun, Soar could work a lot faster if it weren’t running on a traditional computing system.

In 2012, Braden Phillips of the University of Adelaide in Australia got in touch with Laird, offering to build a computer that could run the Soar software efficiently. Phillips is a senior lecturer in electrical and electronic engineering who specializes in computer electronics, and he is interested in making a computer that thinks like a human.

Cognitive architectures like Soar are particularly appealing because – unlike neural networks – they already resemble computer architectures. They divide the mind into memories and processing units, like a computer’s drives, RAM and processors. But that is where the similarity ends.

“When we dug a bit deeper we found that the language used by many cognitive architectures to express behavior is radically different from ordinary programming languages,” said Phillips.

In order to efficiently decide what to do next, Soar uses a form of parallel processing. It compares its working memory – what it’s “thinking” and “perceiving” at any moment – to its procedural memory. Procedural rules that match the current circumstances tell Soar what to do next.

“But the way a PC works, the memories are separate from the part that does all the computation. In order to find a memory that’s relevant to the current situation, you have to bring the memory into the processor to make comparisons,” said Laird.

The computer repeats that action until it has checked all of its memories. It would be a lot faster if Soar could compare its working memory to all the memories simultaneously. And that is what Phillips’ Street Engine will do. It isn’t neuromorphic computing – there are no neurons or synapses. Instead, it is made up of many small memories with processing built in. It runs a search looking for rules that match the state of the working memory.

“Complex behavior can emerge when an AI agent acts according to the combination of many of these rules applied in parallel,” said Phillips.

While chips designed to run neural networks and the Street Engine are different approaches to computing, they have a lot in common. The key similarity is that both can do processing directly in the memory. In fact, Phillips says that memristors are an option for building the Street Engine, too.

The road between Rosie – or Spaun, for that matter – and true AI is long. But with the emphasis on learning, Laird and Eliasmith are taking significant steps toward recreating a human’s mental agility. Meanwhile, Lu and Phillips are developing hardware that may one day allow an intelligent agent to think in real time.

So why the kerfuffle over the perils of AI now? Well, we don’t know if, once created, AI will be able to surpass human intelligence.

Being smart does not mean you are evil.”

John Laird, John L. Tishman Professor of Engineering

One theory of the brain, popularized in part by Kurzweil, holds that the neocortex – the thinking part of the brain – is just a few hundred million pattern recognizers that we train over a lifetime. These pattern recognizers are made of 100 or so closely interconnected neurons. We recognize objects and sounds by the combination of pattern recognizers that fire as a group. (The columns can overlap one another, which some say works against the theory, and the numbers are pretty loose. But just go with it for a moment.)

What if a set of pattern recognizers could be built of searchable memories? Could intelligence emerge from something like a 300-million-memory Street Engine? Phillips thinks so, but the trick will be connecting those memories in the right way. And if that could work, how much smarter than us would a billion-memory Street Engine be, or an equivalent virtual brain? Would it be the demon that annihilates mankind?

“One point I like to make is that being smart does not mean you are evil,” said Laird. “If it does, it is somewhat ironic to have Musk and Hawking worry about evil machines, being two of the smartest humans around.

“Even so, ensuring that machine intelligence works for the good of humanity needs to be one of our primary goals.”

While the core challenge of artificial intelligence (AI) is making machines that think, giving those machines sight and hearing is a major part of that endeavor. Developments in this area have leapt forward with a technique called machine learning.

For instance, machine learning algorithms can “learn” to recognize human faces by analyzing many portraits. Old school machine learning required developers to program in the important features or mark them up in the training images. The new wave, called deep learning, lets them skip that step. The algorithm can decide for itself what to pay attention to.

“Deep learning is an enabling technique for building artificial intelligence systems. In a narrow sense, it performs already very well on some AI tasks, such as speech recognition and image recognition,” said Honglak Lee, an assistant professor of electrical engineering and computer science (EECS) at U-M and a trailblazer in the field of deep learning.

These algorithms are typically constructed of many pattern-recognizing layers. The first layers look at the raw data. Analyzing images of faces, for instance, many break the pictures down into 10×10 pixel squares. In these chunks, the algorithm identifies edges and other components of patterns that are repeated among the different example images.

Armed with these features, the next layers examine how the features relate to one another (for example, the eye usually has an eyebrow above it). These relations build until, at the top level of layers, the algorithm recognizes faces.

Deep learning is also useful for audio and video analysis. While image recognition looks at patterns in space, audio recognition is concerned with those over time. To interpret video, the algorithm must learn spatial and temporal patterns.

The ability to vastly improve computer perception through deep learning has already been used in the classic artificial intelligence problem of game playing. Lee worked with U-M professors Satinder Singh (EECS) and Rick Lewis (Psychology), experts in reinforcement learning, and others at U-M to develop one of the best AI Atari players. This work, presented at a conference last year, got a shout-out in the recent issue of Nature that featured Google DeepMind’s advances in AI Atari play.

The game-playing agent used reinforcement learning to hone its strategy in games like Q*Bert, Space Invaders and Sea Quest. Deep learning allowed the agent to interpret the screen views in real time.

Lee plans to add another level of sophistication. “Potentially deep learning can make a good prediction about the video or temporal data, including games,” he said. “It can be viewed as simulating the future on a short scale, based on the action that the agent takes.”

He is also interested in improving the flexibility of deep learning algorithms so that they can handle variations such as lighting, point of view and facial expression “It allows for much richer reasoning abilities and robust recognition abilities,” said Lee.