Autonomy from the inside out

Questionable maneuvers by at least three human drivers have put a driverless robotaxi into a tough spot on a narrow San Francisco street. The taxi’s lidar, cameras and radar detect two delivery vans parked in the oncoming lane and a tow truck approaching head-on to get around them. Meanwhile, another car is right behind the robotaxi.


The scene unfolds in a video that Waymo co-CEO and Michigan Engineering computer science alum Dmitri Dolgov (Ph.D. CSE ’08) shared in a 2024 talk at the Bob and Betty Beyster Building on North Campus.

“You have to reason what the truck is doing, and also what the car behind you might do,” Dolgov told the audience. “It’s a very interesting multi-agent dynamic problem.”

The Waymo safely backs itself up until the tow truck has a clear path, then drives ahead. The situation is over in 14 seconds. But during each and every one of those seconds, beneath the SUV’s aluminum body, its circuitry routed hundreds of millions of sensor readings, its onboard computing platform performed hundreds of trillions of calculations to process the data, and its AI model made decisions in real time, with no human driver on board.

Automated vehicles (AVs) require immense computational power that grows exponentially with each new level of autonomy. Today’s AV technology—mostly in the form of driver support features like lane-keeping and emergency braking—is demonstrating significant safety benefits and increasing consumer demand. The most advanced technology on the road today is considered “high automation,” which the industry refers to as Level 4 autonomy. Found in vehicles like Waymo’s robotaxis, Level 4 is limited to pockets of urban areas, mostly with good weather. To fulfill the promise of Level 5—driverless vehicles that can freely navigate much more of the nation’s 3.5 million square miles—experts say we need a new generation of semiconductors.

“Cars have been seen as computers on wheels for a while, but to achieve full autonomy, they need to be more like traveling data centers,” said Valeria Bertacco, the Mary Lou Dorf Collegiate Professor of Computer Science and Engineering and leader of the Michigan Advanced Vision for Education and Research in Integrated Circuits, or MAVERIC, collaborative.

“To make that leap, the auto industry is going to need new materials, architectures, systems and manufacturing processes for chips that are faster, cheaper, lower-power and more durable.”

Why autonomy matters

Level 4 autonomous vehicles like Waymo’s represent a major technological leap that’s been decades in the making. Level 4, as defined by the Society of Automotive Engineers (SAE), requires the capability for self-driving in limited conditions, such as within a pre-mapped geographic area or along a set route. It’s the first tier of automation that never requires a human to take the wheel within those conditions. Waymo is the world leader, logging more than 200,000 paid driverless rides per week in the Bay Area, Phoenix, Los Angeles and Austin.

In consumer vehicles, most major automakers offer Level 2 advanced driver-assistance systems, or ADAS, with features like adaptive cruise control and lane-keeping assistance that can add up to hands-free (but not attention-free) driving, mostly on pre-mapped highways and roads and under certain circumstances. About 60% of new cars sold in 2025 are equipped with at least some of these features.

The industry has come a long way since the hype of the 2010s, when tech giants and automakers promised full self-driving cars within a decade. Frenzied ambition evolved into careful, methodical progress. High-profile crashes forced some companies to recalibrate their approach, or their very existence. But years of study have shown conclusively that automation boosts safety.

“The pattern we see is the more automated a system is, the more effective it is. As you go from warning the driver something is going on in front of them, to taking control and just braking, you get improvements in crash reduction,” said Carol Flannagan, a research professor at the U-M Transportation Research Institute (UMTRI) who has worked with General Motors to study the effectiveness of its ADAS features since 2013.

For example, automatic emergency braking systems with cameras and radar cut rear-end collisions by 50%.

“That’s just extraordinary,” Flannagan said. “It’s off the charts in our world.”

More automation, fewer crashes


Source: UMTRI, General Motors

Further up the autonomy scale, Waymo’s robotaxis have been involved in 81% fewer injury-causing crashes than an average human driver on the same routes in San Francisco and Phoenix, the company’s peer-reviewed research has shown.

The technology is maturing at a critical time. While U.S. roadway deaths have come down since their peak in the 1970s, crashes still claim more than 40,000 lives a year. The United States Department of Transportation calls it a “crisis” and has established the National Roadway Safety Strategy to address it.

“Given all the safety features that have been added to vehicles over the years, it’s my view that the only thing that will significantly reduce the number of roadway fatalities at this point is automation,” said Henry Liu, director of UMTRI and the Bruce D. Greenshields Collegiate Professor of Engineering in civil and environmental engineering.

And unlocking autonomy everywhere in all conditions requires big innovation in the tiniest components—the transistors and chips at the vehicles’ core.

Computational constraints

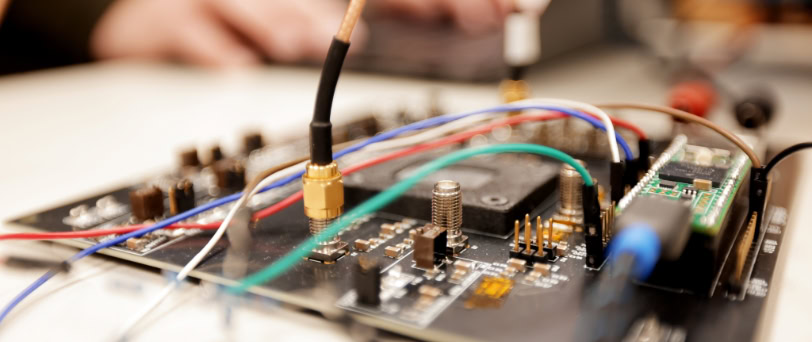

In today’s automated vehicles, a central computing platform processes the deluge of data that continuously flows in from the vehicle’s sensors. That can range from the eight high-resolution cameras on a Tesla Model 3 to the more than 30 cameras, lidar and radar aboard a Waymo robotaxi. The workhorse chips in those computing platforms are high-performance graphics processing units (GPUs) or newer tensor processing units (TPUs) optimized for handling huge datasets and running deep learning AI models. Even for powerful chips, it’s a big job, and running inside a moving vehicle adds complications.

“The amount of compute we need to do per task can be very high, and we’re limited in terms of how much silicon area we have and how much power we can draw. This is a constrained, embedded system,” said Reetuparna Das, associate professor of computer science and engineering. “The need for more efficient AI hardware is very well understood. It’s a billion-dollar market.”

Das sees a bottleneck on the horizon.

“With each new generation of AVs, the number and capabilities, such as the resolution, frame rate and field of view of sensors, will grow for increased safety and enhanced capabilities,” she said.

Even incremental sensor upgrades can dramatically increase the amount of data that needs processing. Tesla, for example, recently upgraded its front-facing camera from 1.2 to 5 megapixels, resulting in a more than five-fold increase in data output. A further upgrade to 8 megapixels would generate more than a terabyte of data every 10 minutes, from just one of the Tesla’s eight cameras. And when instantaneous driving decisions must be made, even the milliseconds it takes to get data from a camera to a central computer can hinder performance.
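The growth in camera data follows from simple multiplication: an uncompressed video stream produces pixels per frame × bytes per pixel × frames per second. The sketch below assumes placeholder settings (2 bytes per pixel, 30 frames per second, no compression); the article does not give Tesla’s actual settings, so the absolute totals are illustrative only and should not be compared directly with the terabyte figure above. What it does show is that pixel count alone accounts for roughly a 4.2-fold increase from 1.2 to 5 megapixels, so a larger jump in output would presumably also reflect other changes, such as bit depth or frame rate.

```python
def raw_data_rate(megapixels: float, bytes_per_pixel: float, fps: float) -> float:
    """Uncompressed camera output in bytes per second (hypothetical settings)."""
    return megapixels * 1e6 * bytes_per_pixel * fps


def terabytes_in(minutes: float, bytes_per_second: float) -> float:
    """Total data produced over a time window, in terabytes (1 TB = 1e12 bytes)."""
    return bytes_per_second * minutes * 60 / 1e12


# Placeholder assumptions, not Tesla specifications.
BYTES_PER_PIXEL = 2
FPS = 30

for mp in (1.2, 5.0, 8.0):
    rate = raw_data_rate(mp, BYTES_PER_PIXEL, FPS)
    print(f"{mp:>4} MP: {rate / 1e6:,.0f} MB/s, "
          f"{terabytes_in(10, rate):.2f} TB per 10 minutes")

# Pixel count alone: 5 MP / 1.2 MP is about a 4.2x increase.
ratio = raw_data_rate(5, BYTES_PER_PIXEL, FPS) / raw_data_rate(1.2, BYTES_PER_PIXEL, FPS)
print(f"5 MP vs 1.2 MP: {ratio:.2f}x")
```

Because every factor multiplies the others, raising frame rate or bit depth at the same time as resolution compounds the increase.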

Other automated vehicles carry more sensors but rely less on high-resolution cameras. The point remains, though: Michigan Engineering researchers say the technology that got the industry where it is today is unlikely to get it to full autonomy. They’re working on what’s next with global industry leaders and a $10 million grant from the state of Michigan to the Michigan Semiconductor Talent and Technology for Automotive Research (mstar) initiative. Michigan Engineering is a founding partner in mstar along with global semiconductor R&D hub imec, semiconductor equipment firm KLA, the Michigan Economic Development Corporation, Washtenaw Community College and General Motors.

At the heart of the challenge lies a twofold problem. First, true autonomy requires sophisticated AI, which demands a tremendous amount of computational power. Second, that computation has to happen on the vehicle itself. It’s “edge computing,” without processing help from the cloud or big data centers.
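One way to see why the computation has to stay on board: every millisecond spent waiting for an answer is distance the vehicle covers before it can react. The sketch below converts a speed and a delay into meters traveled. The 10 ms and 100 ms delays are illustrative assumptions, not measured figures for any vehicle or network.

```python
# Exact conversion: 1 mile = 1,609.344 m and 1 hour = 3,600 s.
MPH_TO_METERS_PER_SECOND = 1609.344 / 3600


def distance_traveled(speed_mph: float, latency_ms: float) -> float:
    """Meters a vehicle covers while waiting latency_ms for a result."""
    return speed_mph * MPH_TO_METERS_PER_SECOND * latency_ms / 1000


# Hypothetical latencies chosen only for illustration.
for label, latency_ms in [("on-board processing", 10), ("remote round trip", 100)]:
    meters = distance_traveled(65, latency_ms)
    print(f"{label}: {latency_ms} ms at 65 mph -> {meters:.2f} m traveled")
```

At highway speed, a tenth of a second of delay corresponds to nearly 3 meters of travel, which is why the sketch treats latency as a distance rather than just a time.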

When AI moves to the edge, it needs to be lean. Less energy consumed by each component of the system means more powerful computing on the same energy budget, which is crucial for vehicle range. Researchers are exploring new hardware designs that use far less energy and are easy enough to manufacture in the big quantities that automakers need.

Through mstar, researchers are reimagining AV computing systems. They’re borrowing tricks from the human brain to design chips and software that can handle data more efficiently. They’re refining Lego-like hardware components that could enable automakers to tailor systems to their needs. And they’re investigating new materials to integrate with silicon for low-power, high-performance processors and memory chips.

A new way to model the brain

The deep neural networks in today’s automated vehicles loosely mimic the structure of a biological brain. Computational nodes stand in for the brain’s neurons, and the synapses that connect neurons are represented by numerical weights. In our brains, synapses grow stronger or weaker through experience and learning. Similarly, AI models adjust their weights over time through training.
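The analogy can be made concrete with a single artificial neuron: it multiplies each input by a weight, sums the results and passes them through an activation function, and training nudges the weights to reduce error. The sketch below is a minimal, generic illustration (one neuron, a sigmoid activation, squared-error loss, plain gradient descent with an arbitrary learning rate of 0.5); the networks in real AVs have vastly more weights and far more elaborate training pipelines.

```python
import math


def neuron(inputs, weights, bias):
    """Weighted sum of inputs (the 'synapses') squashed by a sigmoid into (0, 1)."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-z))


def train_step(inputs, target, weights, bias, learning_rate=0.5):
    """One gradient-descent step on the loss (output - target)**2 / 2."""
    y = neuron(inputs, weights, bias)
    # Chain rule: d(loss)/dz = (y - target) * sigmoid'(z), and sigmoid'(z) = y * (1 - y).
    grad_z = (y - target) * y * (1 - y)
    # d(z)/d(weight_i) = input_i, so inputs that contributed more get adjusted more.
    new_weights = [w - learning_rate * grad_z * x for w, x in zip(weights, inputs)]
    new_bias = bias - learning_rate * grad_z
    return new_weights, new_bias


weights, bias = [0.1, -0.2], 0.0
inputs, target = [1.0, 0.5], 1.0

print(f"before training: {neuron(inputs, weights, bias):.3f}")  # 0.500
for _ in range(200):
    weights, bias = train_step(inputs, target, weights, bias)
print(f"after training:  {neuron(inputs, weights, bias):.3f}")  # closer to 1.0
```

Each step strengthens the weights that push the output toward the target, a loose counterpart to a synapse strengthening with experience.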

AI “brains,” however, are woefully inefficient compared to their human counterparts. Our brains can pull off the equivalent of an exaflop’s worth of computation (a billion billion operations every second) using just 20 watts of power. By comparison, one of the world’s most powerful supercomputers, Oak Ridge National Lab’s Frontier, hit the exaflop milestone in 2022, but it required 20 megawatts of electricity, enough to power tens of thousands of homes.
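Dividing operations per second by power draw gives operations per joule (a watt is one joule per second), which makes the gap explicit. Both inputs are the rough round numbers quoted above, an exaflop at about 20 watts versus about 20 megawatts, so the result is an order-of-magnitude comparison, not a precise measurement.

```python
EXAFLOP = 1e18  # operations per second

# ops/s divided by watts (J/s) gives operations per joule.
brain_ops_per_joule = EXAFLOP / 20         # ~20 watts
frontier_ops_per_joule = EXAFLOP / 20e6    # ~20 megawatts

advantage = brain_ops_per_joule / frontier_ops_per_joule
print(f"Brain: {brain_ops_per_joule:.0e} ops/J, Frontier: {frontier_ops_per_joule:.0e} ops/J")
print(f"Efficiency gap: about {advantage:,.0f}x")  # about 1,000,000x
```

By this rough measure, the brain does about a million times more computation per unit of energy.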

To help tomorrow’s AI work smarter, U-M researchers are developing a new approach that, rather than imitating the brain’s structure, takes cues from its optimized behavior.

Wei Lu, the James R. Mellor Professor of Engineering in mechanical engineering, explains that one of the behaviors that makes our brains so efficient is their ability to filter out unnecessary information. Instead of analyzing every object on the road independently, we form a general mental picture of the road ahead. Then, our eyes and brain work together to detect contrast, motion and sudden events in that otherwise static environment.

The artificial neural networks operating in today’s AVs, on the other hand, are flooded with continuous, detailed sensor feeds: video, plus lidar and radar point clouds. Everything gets through, and all that data requires a gargantuan amount of processing power. But does it need to? That’s the question Das and Lu are asking. They’re testing a more efficient processor called a neuromorphic chip and a different type of algorithm called a spiking neural network. The technologies would combine with a neuromorphic sensor called an event camera, which registers changes over time rather than capturing complete frames at a fixed rate. Such a system could complement traditional cameras.


“Neuromorphic sensors don’t capture frames like conventional cameras do. Instead, they detect change in each pixel independently,” Lu said. “The change signal accumulates over time and sends a voltage spike to the processor when a certain threshold of change is reached.

“Our neuromorphic chips can be tightly integrated with the neuromorphic sensors to efficiently extract such information.”
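Lu’s description of a pixel that accumulates change and fires once a threshold is crossed can be modeled in a few lines. This simplified sketch follows a common modeling convention for event cameras, which compare the logarithm of brightness against a per-pixel reference level; the threshold value is a hypothetical choice, and real sensors do this in analog circuitry at every pixel simultaneously rather than in software.

```python
import math


def pixel_events(brightness, threshold=0.2):
    """Simplified event-camera pixel.

    brightness: brightness samples for one pixel over time (must be positive).
    threshold: hypothetical contrast threshold, in units of log brightness.
    Returns a list of (time_index, polarity) events, +1 for brighter, -1 for darker.
    """
    events = []
    reference = math.log(brightness[0])  # level at the last event
    for t, value in enumerate(brightness[1:], start=1):
        change = math.log(value) - reference
        # Change accumulates until it crosses the threshold; each crossing emits one
        # event and moves the reference by one threshold step toward the new level.
        while abs(change) >= threshold:
            polarity = 1 if change > 0 else -1
            events.append((t, polarity))
            reference += polarity * threshold
            change = math.log(value) - reference
    return events


static_scene = [100.0] * 10
sudden_flash = [100.0] * 5 + [200.0] * 5

print(pixel_events(static_scene))  # []
print(pixel_events(sudden_flash))  # [(5, 1), (5, 1), (5, 1)]
```

An unchanging pixel sends nothing at all; only the sudden brightening produces output, which is where the data savings of this approach come from.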

These systems operate much faster than conventional cameras—in microseconds rather than milliseconds.

He explains that this is similar to how our eyes and brain operate.

“Our retinas contain neurons that generate voltage spikes in response to contrast, motion and sudden events, and our visual cortex processes that information and generates the 3D colorful image we see,” Lu said.

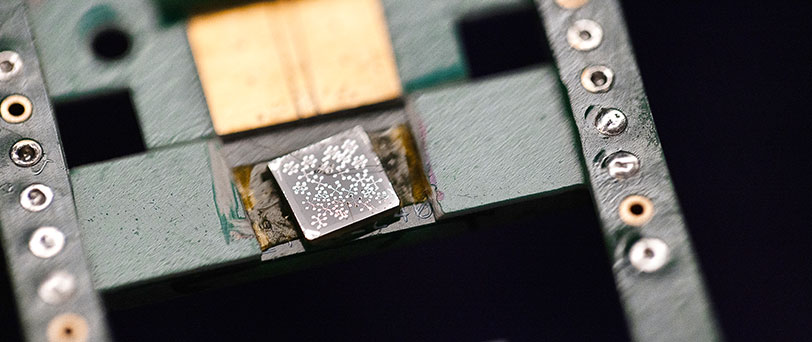

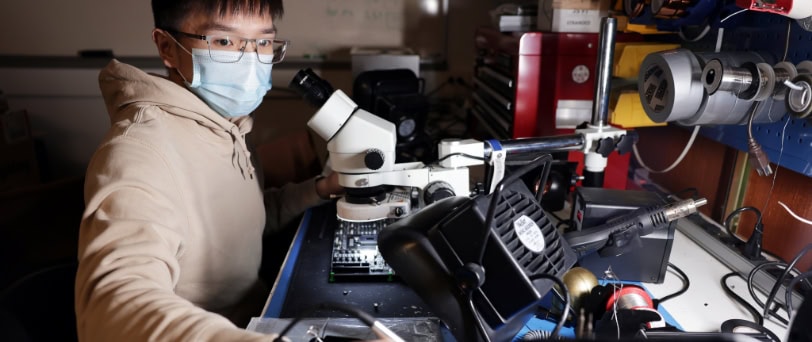

Their neuromorphic processor is based on a tungsten oxide memristor they’ve been advancing for at least a decade. Memristors merge memory and processing, taking the place of transistors to offer an entirely different computing paradigm that doesn’t rely on binary code.
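The article does not detail the chip’s internal design. A widely studied way memristors fuse memory and processing is the crossbar array: each device’s conductance stores a weight, and applying voltages to the rows makes the array compute a matrix-vector product, the core operation of neural networks, through circuit physics rather than by shuttling data to a separate processor. The sketch below simulates an idealized crossbar; it ignores wire resistance, device variability and the converters needed to read results, and it is not a model of the U-M tungsten oxide device specifically.

```python
def crossbar_output(conductances, voltages):
    """Idealized memristor crossbar performing a matrix-vector multiply in place.

    conductances[i][j]: conductance in siemens of the device linking input row i
        to output column j; this stored, analog value plays the role of a weight.
    voltages[i]: input voltage in volts applied to row i.
    Returns the current in amperes collected on each column.
    """
    rows, cols = len(conductances), len(conductances[0])
    currents = [0.0] * cols
    for i in range(rows):
        for j in range(cols):
            # Ohm's law: each device passes current I = G * V, and Kirchhoff's
            # current law adds up the currents that meet on a column wire.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 2x2 array: conductances in the microsiemens range, inputs in tenths of a volt.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.1, 0.2]
print(crossbar_output(G, V))  # roughly [7e-07, 1e-06] amperes
```

The weights never leave the devices that store them, and the stored values are continuous conductances rather than 1s and 0s.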

In future driverless cars, a combination of neuromorphic event cameras and conventional cameras could interpret a vehicle’s environment and distance from objects in time and space far more accurately and quickly than today’s systems, Lu said. This approach would also cost less because it wouldn’t require lidar.

“It works very well in situations where there’s typically no change in your video or no collisions, but suddenly something happens and you want to detect it,” Das said. “At the same time, with less data coming in, there’s less work that the central ADAS unit needs to do.”

Lego-like chiplet architectures

Another limiting factor in today’s AVs is the “system on a chip” architecture that underpins their computing systems. This approach prints all the necessary components of a computing system—such as the central processing units, GPUs, TPUs and memory—on a single piece of silicon. Also used in devices like smartphones and home appliances, system-on-a-chip has been the automotive industry standard for decades. But the computing power needed for AVs is pushing the architecture to its limit in terms of physical size and complexity.

Leveling up: Autonomy and computational power

AI processing power, measured in trillions of operations per second (TOPS), needs to scale exponentially as AVs climb the Society of Automotive Engineers’ vehicle automation ladder.


Level 2 is the highest level that’s widely available today; business data platform Statista predicts that about 60% of new vehicles sold in 2025 will have Level 2 features. Levels 3 and 4 are limited to a few manufacturers and applications, such as Waymo’s robotaxis. Level 5 vehicles are likely years or decades away from production.

Level 5: Complete autonomy in all areas under all conditions. TOPS: 4,000+ (estimate)

Level 4: Complete autonomy under certain conditions, such as in a pre-mapped area, as with local driverless taxis. TOPS: 320

Level 3: Limited autonomy in certain conditions, like traffic jams. Driver must be ready to take control when the system requests it. TOPS: 24

Level 2: Driver support that includes steering AND speed management, such as lane centering AND adaptive cruise control. TOPS: 2

Level 1: Limited driver support including steering OR speed management, such as lane centering OR adaptive cruise control. TOPS: N/A

Level 0: Warnings and momentary assistance only, such as automatic emergency braking and blind spot or lane departure warnings. TOPS: N/A

SOURCE: SAE International, Global Semiconductor Alliance
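The TOPS figures listed above grow by roughly the same multiple at each step, which is what makes the growth exponential rather than linear. The short calculation below uses only those figures; Levels 0 and 1 are omitted because no TOPS value is given for them, and because the Level 5 figure is an estimate stated as 4,000+, the last ratio is a lower bound.

```python
# TOPS figures from the SAE / Global Semiconductor Alliance chart (Level 5 is an estimate).
TOPS_BY_LEVEL = {2: 2, 3: 24, 4: 320, 5: 4000}


def growth_factors(tops_by_level):
    """Ratio of required compute between each automation level and the one below it."""
    levels = sorted(tops_by_level)
    return {(lower, upper): tops_by_level[upper] / tops_by_level[lower]
            for lower, upper in zip(levels, levels[1:])}


for (lower, upper), factor in growth_factors(TOPS_BY_LEVEL).items():
    print(f"Level {lower} -> Level {upper}: about {factor:.1f}x")
```

Each level up demands roughly 12 to 13 times the compute of the one below it, about an order of magnitude per step.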

It’s clear that innovation is needed, but Mike Flynn, the Fawwaz T. Ulaby Collegiate Professor of Electrical and Computer Engineering, points out that the incentive might not be there for traditional semiconductor firms.

“Maybe 10 million cars are sold every year in the U.S.,” Flynn said. “That volume might sound like a lot, but it isn’t high enough to justify spending a lot to develop a new chip design when you compare it to the number of new iPhones that are sold per year.”

What if auto companies could innovate on their own?

Researchers are pursuing “chiplets” that could make that possible. The chiplet approach breaks a computing system into smaller, modular components. Separate CPU, GPU, AI accelerator, memory block and input/output chiplets, for example, could be mixed and matched within a single package to build a computing system that’s better tailored to a particular need. Today, chiplets see limited use in high-performance computing systems, but their interconnectability and durability aren’t yet good enough for use on a vehicle.

Arm, the British semiconductor and software design company whose processors are ubiquitous in smartphones, is working to change that, collaborating with more than 50 industry partners to “encourage more plug-and-playability,” said John Kourentis, Arm’s director of automotive go-to-market, at mstar’s Automotive Chiplet Forum in Ann Arbor in 2024.

“Today we’re seeing single-vendor chiplet systems that are tightly coupled together,” Kourentis said. “The utopia is a multi-vendor marketplace where chiplets fit together like Lego bricks.”

This would make it possible for auto companies to join the effort. “But the chiplets have to talk to each other,” Flynn said.

He and Bertacco, who leads mstar at U-M, are working to solve one of the key challenges in bringing this technology to the auto sector.

“Present-day chiplet communication protocols weren’t developed with autos in mind,” Bertacco said. “They are not robust enough to operate for years in the rugged environment of a moving car.”

They’re developing a new standard and building a prototype system.
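The article does not say what the U-M team’s protocol uses to stay robust. A standard way for any data link to tolerate noise is to attach a checksum such as a cyclic redundancy check (CRC) to each packet and resend when the check fails. The sketch below illustrates that generic technique with Python’s built-in `zlib.crc32`; the noisy channel and packet contents are hypothetical.

```python
import zlib


def frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 checksum to the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def is_intact(packet: bytes) -> bool:
    """Recompute the checksum on arrival; a mismatch means bits were corrupted in transit."""
    payload, checksum = packet[:-4], int.from_bytes(packet[-4:], "big")
    return zlib.crc32(payload) == checksum


def send(payload: bytes, channel, max_attempts: int = 3) -> int:
    """Transmit until a packet arrives intact; returns the number of attempts used."""
    for attempt in range(1, max_attempts + 1):
        if is_intact(channel(frame(payload))):
            return attempt
    raise ConnectionError(f"packet still corrupted after {max_attempts} attempts")


def noisy_channel(failures: int):
    """Hypothetical link that flips one bit in each of its first `failures` packets."""
    sent = 0

    def channel(packet: bytes) -> bytes:
        nonlocal sent
        sent += 1
        if sent <= failures:
            damaged = bytearray(packet)
            damaged[0] ^= 0x01  # flip the lowest bit of the first byte
            return bytes(damaged)
        return packet

    return channel


print(send(b"lidar frame 0042", noisy_channel(failures=1)))  # 2
```

The first copy is rejected because its checksum no longer matches, and the retry succeeds. Retries cost time and energy, so a link in a harsh environment also has to keep errors rare in the first place.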

Even as transistors get smaller and smaller, it’s difficult to cram enough of them onto a chip to meet the growing needs of an AV. And as chips get larger and more complicated, they become more vulnerable to defects and failures, which are especially dangerous in cars and trucks. In addition, because they must be manufactured on advanced equipment by big chip makers, the ability to customize them is limited.

“Chiplets would end up having to do a lot of talking to each other in vehicles because they’re solving big problems,” Flynn said. “Not only does their communication need to be robust, it also needs to be efficient in terms of energy and bandwidth. We’re trying to make it high-speed and low-power too.”
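Flynn’s point about energy and bandwidth comes down to one product: the power a link consumes equals the bits it moves per second times the energy spent per bit, usually quoted in picojoules. The figures below (1 terabit per second, 2 versus 0.5 picojoules per bit) are hypothetical values chosen to show the scaling, not specifications for any real chiplet interface.

```python
def link_power_watts(bandwidth_bits_per_s: float, energy_joules_per_bit: float) -> float:
    """Power spent moving data = bits per second x energy per bit."""
    return bandwidth_bits_per_s * energy_joules_per_bit


TERABIT_PER_S = 1e12
PICOJOULE = 1e-12

# Hypothetical link efficiencies, for illustration only.
for pj_per_bit in (2.0, 0.5):
    watts = link_power_watts(TERABIT_PER_S, pj_per_bit * PICOJOULE)
    print(f"1 Tb/s at {pj_per_bit} pJ/bit: {watts:.1f} W")
```

Because chiplets solving big problems would exchange data constantly, any saving in energy per bit scales directly with the total traffic.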

Materials beyond silicon

One could argue that transportation was the very first field to harness the power of silicon. The Apollo Guidance Computer, one of the earliest silicon-based computers, helped humans go to space in 1969. Each of its individual chips was about the size of a sticky note and held six transistors.

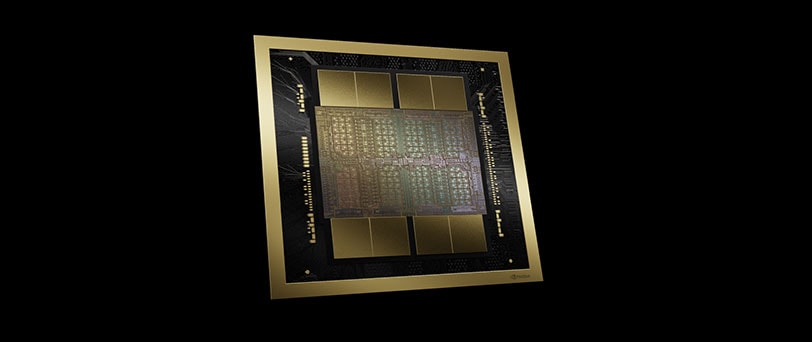

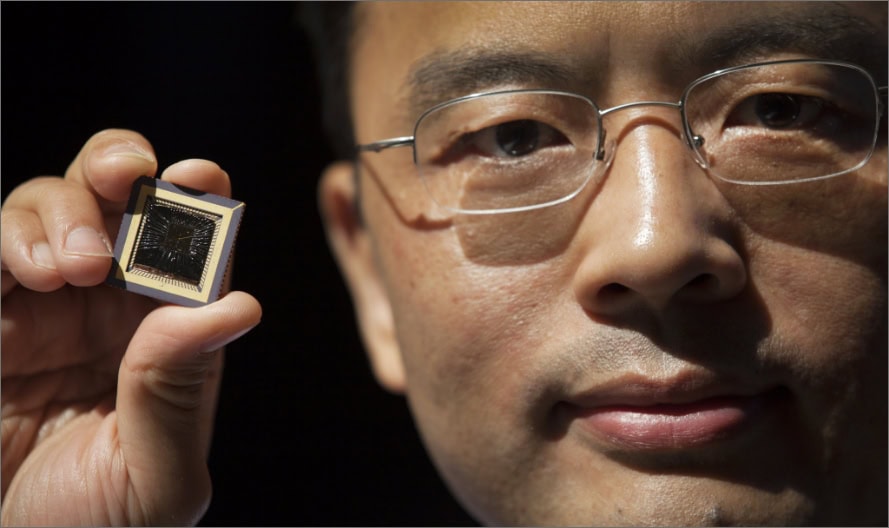

Around that time, Intel co-founder Gordon Moore predicted that the number of transistors that could be packed onto a chip would keep doubling at a regular pace, a rate he set at every two years in 1975. That trend, known as Moore’s law, has more or less held true ever since. Nvidia’s Orin platform, one of the most advanced chips used in today’s AVs, holds 17 billion transistors on a thumbprint-size slice of silicon. And the company’s next chip, Thor, will cram 208 billion transistors into two connected side-by-side slices.
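The article’s own figures give a quick check on Moore’s law. Going from 6 transistors per Apollo-era chip to Orin’s 17 billion takes about 31 doublings, which at one doubling every two years would span roughly 63 years. Since fewer years than that separate 1969 from today’s AV chips, the real pace over that stretch was, if anything, a bit quicker. This is back-of-the-envelope arithmetic on two data points, not a fit to historical data.

```python
import math


def doublings_between(start_count: float, end_count: float) -> float:
    """Number of times a count must double to grow from start_count to end_count."""
    return math.log2(end_count / start_count)


# Figures from the article: 6 transistors per Apollo-era chip, 17 billion on Nvidia Orin.
n = doublings_between(6, 17e9)
print(f"{n:.1f} doublings, or about {2 * n:.0f} years at one doubling every two years")
# 31.4 doublings, or about 63 years at one doubling every two years
```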

“This scaling process allows the electrons to have much shorter paths, so they can travel a lot more quickly and we can drive more electrons per unit time,” said Becky Peterson, associate professor of electrical and computer engineering and director of the U-M Lurie Nanofabrication Facility. “That’s how we have seen the exponential growth in computing power in our lifetimes.”

But the ever-shrinking transistors that have enabled ever more powerful computers are reaching their physical size limits. In addition, chips with more and more transistors require more and more power. Their hunger for electricity is causing growing concern in the AI industry, and it’s even more problematic in a power-constrained automotive environment.


In short, if we want computers powerful and energy-efficient enough to drive the AVs of the future, the industry needs to move beyond tiny transistors on silicon chips. And U-M researchers are doing just that. They’re working on magnetoelectric materials that take advantage of quantum effects to store and process data in new ways, using less power. They’re also developing amorphous and atom-thin compounds that could piggyback on top of conventional silicon to add a second layer of transistors.

More stories from

The Michigan Engineer magazine

John Heron, an associate professor of materials science and engineering, is one of those researchers. His focus is on a special class of materials with unique—and linked—magnetic and electronic properties that can be harnessed for big energy savings.

Their electrons interact with each other more readily than they do in today’s materials that rely on single-electron behavior.

“We design materials where the single electron physics breaks down and instead the electrons interact very strongly,” Heron said. “So when you do something to interact with an electron, you actually interact with the whole collective of coupled electrons and you can reach new energy efficiency paradigms.”

A second advantage is that their electronic and magnetic properties are tied together, enabling an electric voltage to flip the material’s magnetic orientation, equivalent to whether a magnet’s north pole points up or down. Because a magnet has poles intrinsically, these orientations or states are non-volatile, meaning they’re retained even when the power is off. In this way, the materials naturally integrate computation and memory into the same device, saving the energy typically required to transport data throughout the computer.

“You could probably get at least 1,000 times the energy performance from the materials that we investigate and design,” Heron said.

Heron plans to design a prototype and develop strategies to integrate the new materials with silicon-based electronics.

From present to future: Bridging the gap

New materials will play a critical role in tomorrow’s AV chips. But for now, it’s still important that innovations are compatible with silicon and current manufacturing infrastructure.

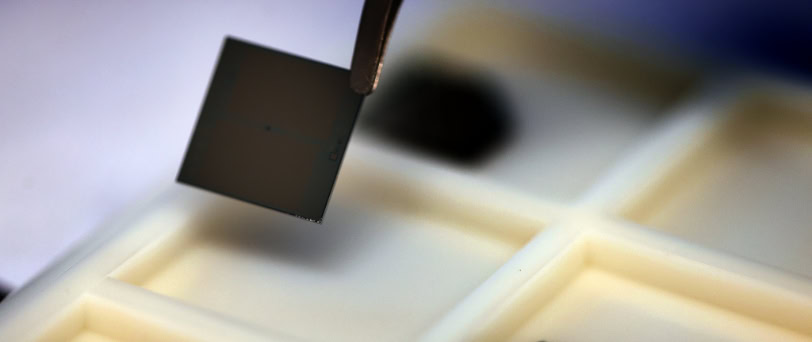

Peterson, who directs U-M’s Lurie Nanofabrication Facility, is a member of a team that’s working to bridge that gap by stacking ultra-thin materials on top of silicon on chips. The technique could pack an additional layer of transistors into nearly the same amount of space.

“What we want to do is manufacture multiple layers of transistors on the same chip,” Peterson said. “And that’s hard to do.”

Employing a custom atomic layer deposition process, Peterson will be working to add transistors made with an ultra-thin semiconductor layer such as zinc tin oxide on top of the first layer of silicon transistors to achieve very low-power, high-performance processors and memory.

Unlike silicon, zinc tin oxide is not crystalline and does not have a repeating atomic structure. It’s amorphous, or unstructured, more like glass.


“The atoms can be in these somewhat random positions, and we still get good quality semiconductor films,” Peterson said. “We’re designing it so that places like Intel or TSMC or GlobalFoundries can build their chips and then put another layer of transistors on top. And we can use those to do more computation or to build in more energy-efficient memories or to handle power that the bottom layer can’t handle.”

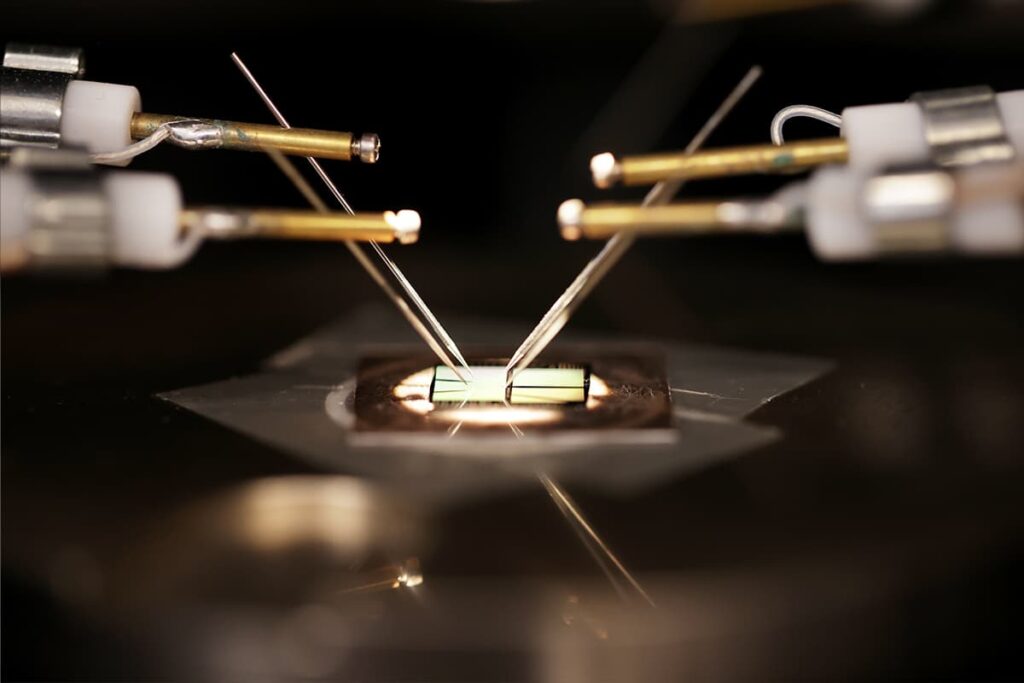

Beyond ultrathin, Ageeth Bol, professor of chemistry and materials science and engineering, is working with slivers of materials so slim they’re referred to as 2D.

“With silicon, when you go much smaller than a few atoms thick, the material starts to become leaky from an electricity standpoint, and the device doesn’t perform well,” Bol said.

Molybdenum disulfide, on the other hand, has been shown to be a capable semiconductor at 2D thicknesses, meaning it could stack onto silicon or onto layers of itself. Bol’s goal is to improve upon current manufacturing practices, which today are suited more to the lab than the factory.

“The current state of the art is basically based on exfoliating bulk crystals,” she said. “So you take a big, bulky chunk of crystal, and then you put sticky tape on it and you pull it off. Then you stamp that on a piece of silicon wafer, and hope that you find a little sliver that’s one atom thick.”

Bol will test ways to grow the material with a plasma-enhanced approach, with the goal of identifying the sweet spot that accelerates the process without damaging the delicate material. Finally, she’ll figure out how to integrate it with silicon.

From the lab to the street

The robotaxis that navigate San Francisco with advanced sensor suites and edge AI wouldn’t exist without the specialized semiconductors that came first. Breakthroughs that began in the early 2000s led to GPUs that could apply their immense parallel processing capabilities to tasks beyond rendering images, as well as other AI accelerators that can guide deluges of data through the extensive, immediate computation deep learning requires.

“Level 4 is an enormous, incredibly hard step. Waymo has done a great job of engineering to get us there so far,” said Greg Stevens, research director at Mcity. “But nobody knows how good is good enough or how many advances in AI and compute we’ll need to eventually scale up to Level 5.”

Last year, semiconductor industry representatives toured Mcity and looked to the horizon.

“It’s great to see people coming together to address these aspects,” Michael Sun, who leads the automotive business development unit at Taiwan-based TSMC, said on the tour. “I’ve been working in this area for a long time, and I think there’s a lot of momentum right now. Semiconductors are cool again.”