Cracking the cochlea: U-M team creates mathematical model of ear’s speech center

New research paves the way for modeling the transduction of speech and music at the cochlear level.

Until now, the part of the ear that processes speech was poorly reflected in computer models, but University of Michigan researchers have figured out the math that describes how it works. The findings could help improve hearing tests and devices that restore some hearing to deaf people.

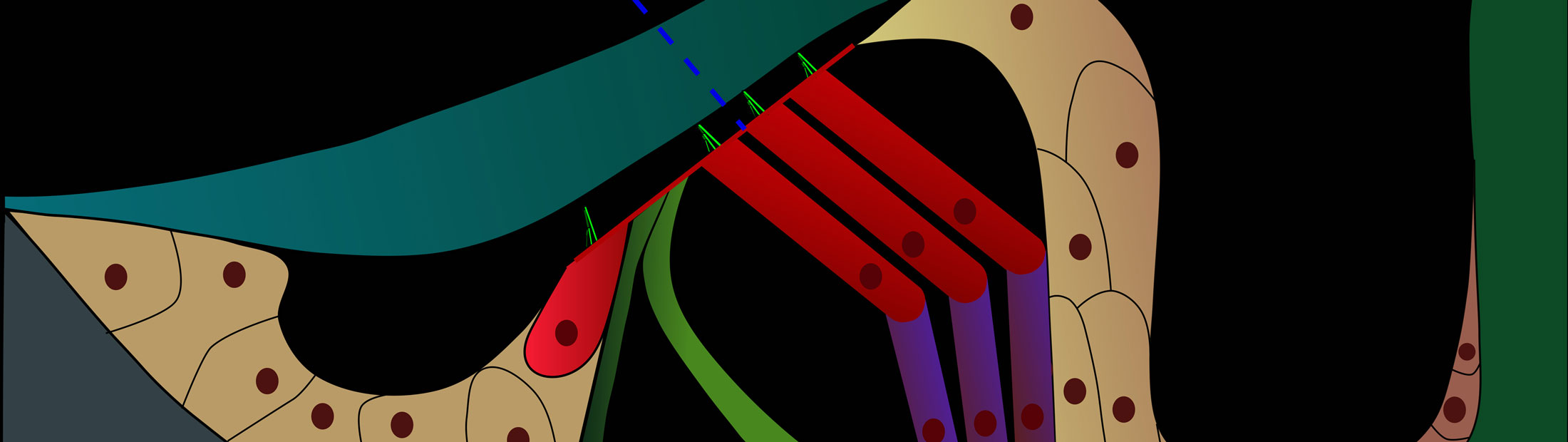

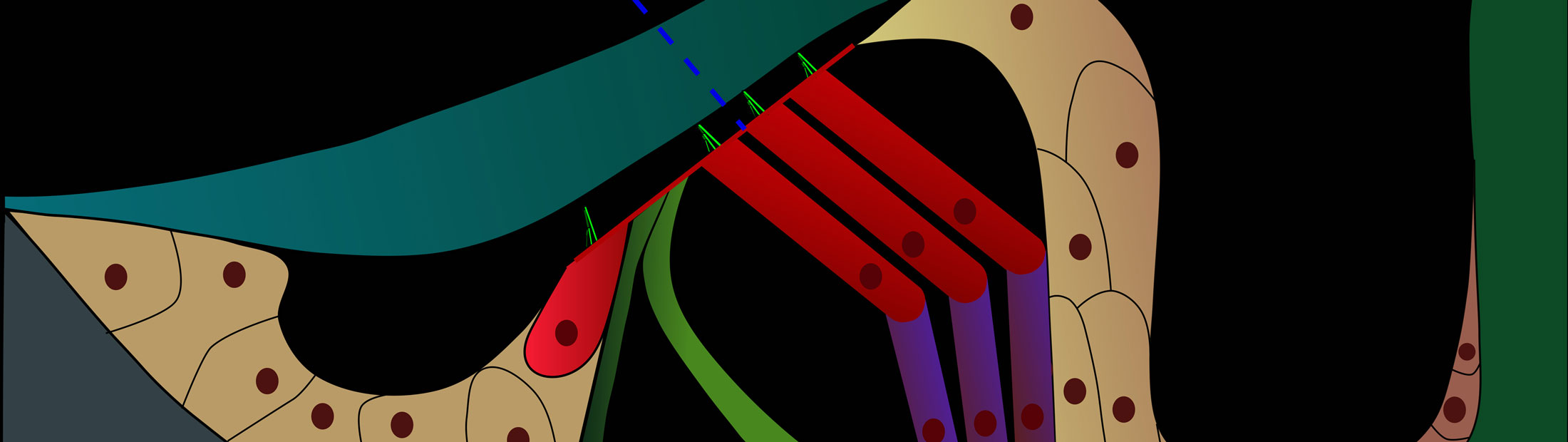

Inside the ear, a snail-shaped organ called the cochlea takes in pressure information from the eardrum and turns it into nerve impulses that are sent to the brain. A full understanding of how this tube-like structure does its work, from end to end, has been elusive.

“No one has been able to piece together a complete model that describes the entire cochlea, especially at the apex, or the end furthest from the eardrum,” said Karl Grosh, a professor of mechanical engineering. “Existing models were unable to match the low frequencies processed at the apex.

“And that’s been a problem since it’s where speech is processed.”

The apex has been difficult for researchers to study because it is tapered and has a different cell structure from the base. Because it sits farther inside the ear, it is also harder to reach for testing without causing damage.
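Why the apex is tied to low frequencies can be illustrated with the Greenwood function, a widely used empirical place-frequency map. It is not part of the U-M model; the sketch below is only a rough illustration that assumes Greenwood's human constants (A = 165.4 Hz, a = 2.1, k = 0.88), with position `x` measured as a fraction of cochlear length from the apex. The function name `greenwood_frequency` is hypothetical.

```python
# Greenwood place-frequency map: an empirical fit, NOT the U-M model.
# Assumed human constants (Greenwood): A = 165.4 Hz, a = 2.1, k = 0.88.
# x is the position along the cochlea as a fraction of its length,
# measured from the apex (x = 0) to the base (x = 1).

A_HZ = 165.4
A_SLOPE = 2.1
K = 0.88


def greenwood_frequency(x: float) -> float:
    """Return the approximate characteristic frequency (Hz) at position x."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return A_HZ * (10 ** (A_SLOPE * x) - K)


if __name__ == "__main__":
    # Print the estimated best frequency at a few points from apex to base.
    for x in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"x = {x:.2f} from apex -> about {greenwood_frequency(x):,.0f} Hz")
```

With these constants the map runs from roughly 20 Hz at the apex to roughly 20 kHz at the base, so lower frequencies, including much of the energy in speech, map toward the apical end.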

Recent advances in optical coherence tomography (OCT), a technique that uses light waves to create 3D images and measure how sound moves through different parts of the ear, have allowed for a closer look at the cochlea’s apex region.

Using OCT data from other researchers, Grosh worked with Aritra Sasmal, a PhD student in mechanical engineering, to break down the cochlea’s mechanics, fluid-structure interaction and cell makeup.

Their work sheds new light on the role of a particular part of the cochlea, the basilar membrane. It runs the length of the cochlea, separating two liquid-filled tubes: the scala media and the scala tympani. Previous research has suggested that the membrane is the critical element in the ear’s ability to amplify and transmit sound waves.

But Grosh and Sasmal’s work shows the basilar membrane is only part of the equation. They showed that subtle changes in the cell structure along the cochlear spiral and the shapes of the liquid-filled tubes are key elements at speech frequencies. Their work is published online in the Proceedings of the National Academy of Sciences.

“Most numerical models work well at the base but fail miserably at the apex,” Sasmal said. “Our modeling work is the first to show why the apex behaves differently, and it paves the way for modeling the transduction of speech and music at the level of the cochlea.”

The researchers believe their model could improve the way newborns are screened for hearing impairment. One non-invasive procedure, typically done in the first days of life, sends two tones into the ear and records a third tone that the cochlea produces in response.

A better understanding of how the cochlea works, particularly in the low-frequency region at the apex, could help researchers interpret that third tone and so give a clearer picture of a baby’s hearing.
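The two-tone screening procedure described above matches a distortion-product otoacoustic emission (DPOAE) test. As a rough sketch of its frequency bookkeeping, the code below assumes the common setup in which two primary tones f1 < f2 are played at a ratio f2/f1 of about 1.22 and the measured third tone is the cubic distortion product at 2f1 − f2. The function name `dpoae_frequencies` and the example f2 values are hypothetical and are not taken from the study.

```python
# Minimal sketch of the frequencies involved in a DPOAE test.
# Assumptions (not from the study): primaries f1 < f2 with f2/f1 ~= 1.22,
# and the recorded "third tone" is the cubic distortion product 2*f1 - f2.

DEFAULT_RATIO = 1.22


def dpoae_frequencies(f2_hz: float, ratio: float = DEFAULT_RATIO) -> tuple[float, float, float]:
    """Return (f1, f2, fdp) in Hz for an upper primary f2 and a ratio f2/f1."""
    if f2_hz <= 0 or ratio <= 1.0:
        raise ValueError("f2 must be positive and the ratio f2/f1 must exceed 1")
    f1_hz = f2_hz / ratio
    fdp_hz = 2 * f1_hz - f2_hz  # cubic distortion product, below both primaries
    return f1_hz, f2_hz, fdp_hz


if __name__ == "__main__":
    # Hypothetical f2 values spanning part of a typical test range.
    for f2 in (1000.0, 2000.0, 4000.0):
        f1, _, fdp = dpoae_frequencies(f2)
        print(f"f1 = {f1:7.1f} Hz, f2 = {f2:7.1f} Hz -> 2f1 - f2 = {fdp:7.1f} Hz")
```

At this ratio the distortion product lands at about 0.64 × f2, below both primaries, so low-frequency measurements involve the apical region that the new model targets.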

Improved modeling of speech and music transduction could boost the performance of cochlear implants, devices that can restore speech perception for deaf people. An implant takes in sound, converts it into an electrical signal that mimics the one a healthy ear would create, and passes that signal on to the brain.

That mimicked signal is often a poor approximation of what a normal ear would create. Furthering our understanding of how the cochlea works could lead to better speech processing algorithms, giving cochlear implants and hearing aids the ability to reproduce sounds more accurately.

The paper on this work is titled “Unified cochlear model for low- and high-frequency mammalian hearing.”

The research was supported by the National Institutes of Health.

Grosh is also a professor of biomedical engineering and an affiliated faculty member of the Kresge Hearing Research Institute.